Portal: advanced documentation

The sections below contain advanced information on the different CREW testbeds. For information on the benchmarking platform, please consult the section of the w-iLab.t testbed on benchmarking. You can use the menu on the left of this website to navigate through the portal.

The information on the portal will be regularly updated as additional information and cognitive components become available.

Basic tutorial: your first experiment on w-iLab.t office testbed

Run your first experiment on the w-iLab.t Office testbed

!! THIS INFORMATION ONLY APPLIES TO THE w-iLab.t Office testbed. !!!

Up to date documentation on the w-iLab.t Zwijnaarde testbed can be found at http://doc.ilabt.iminds.be/ilabt-documentation/wilabfacility.html

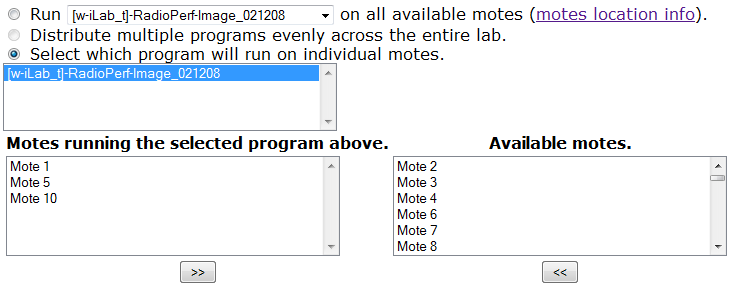

In this basic tutorial you will learn how to run your first sensor experiment on w-iLab.t. The sensor code we will use for this experiment is called RadioPerf. This application is able to send commands over the USB channel to the mote (e.g. start sending radio packets of x bytes to destination y). The mote also periodically sends reports back over the USB channel (e.g. how many packets it received, what the RSSI of the received packets was, ...). In this tutorial you will learn how to tell a sensor node to start sending packets and afterwards analyze the result with one of the w-iLab.t tools. Important note: the program and class files used in this tutorial are programmed/generated in a TinyOS environment. If you are not familiar with TinyOS, it is strongly advised to check out the TinyOS tutorials. Note that the class files are explained in tutorial 4.

Send an e-mail to  to request an OpenVPN account for the w-iLab.t testbed. Be sure to also mention your affiliation and/or the project for which you want access to the testbed. We recommend downloading the VPN software from the OpenVPN website. Once you have installed the software and received the necessary certificates and credentials, you should be able to connect to the w-iLab.t testbed. Make sure you run the OpenVPN software as Administrator/root!

- Request w-iLab.t account
- Create your first job
- Schedule your first job
- Analyze results
- Visualize experiment
- Schedule parametrized experiment

Now that you're able to connect to the w-iLab.t web interface, you can request an account on the w-iLab.t Office testbed by completing the form on the signup page. You can register for the w-iLab.t Zwijnaarde testbed by clicking "Request Account" on the w-iLab.t Zwijnaarde home page.

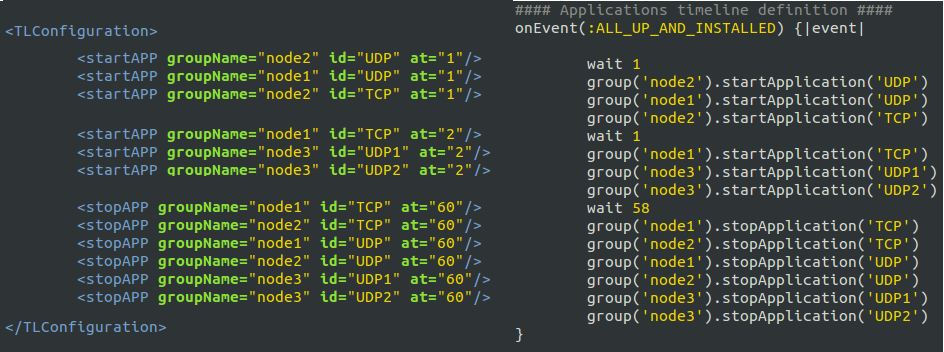

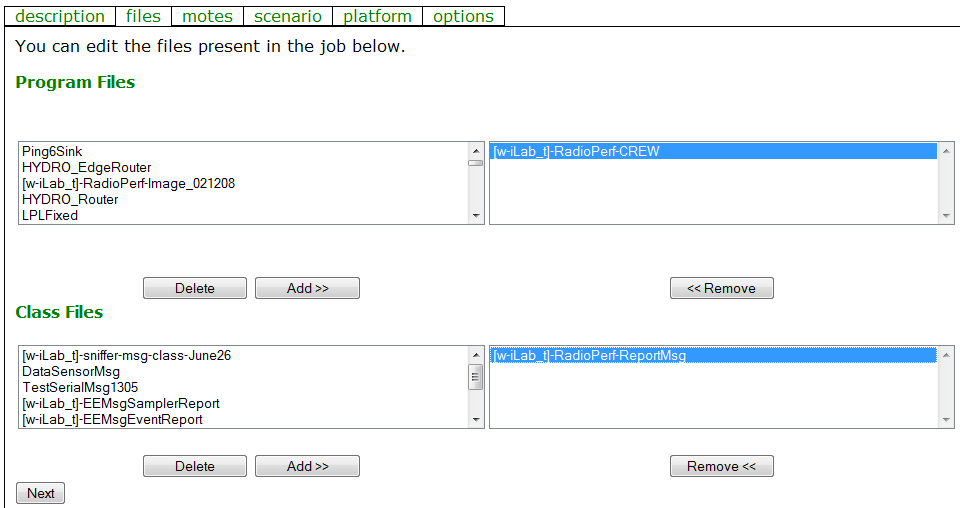

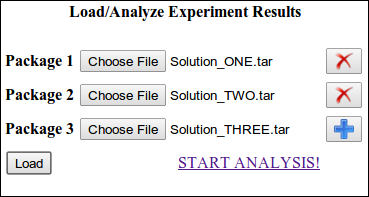

Once your account has been approved, you can log in to the w-iLab.t Office testbed. (The following describes your first job on the Office testbed. If instead you want to use the w-iLab.t Zwijnaarde testbed, tutorials are available at the w-iLab.t Zwijnaarde info page (10.11.31.5). After registration on the w-iLab.t Zwijnaarde home page, you should first take a look at the Emulab-OMF tutorial and reserve your nodes here.)

Now go to the job page to create your first job. Click the Create new job button and fill in a name and description. Click Next or go to the files tab.

In the files tab you must select at least one Program File and one Class File. The Program File contains the firmware that will be programmed on the sensor nodes. Sensor nodes can send messages to the w-iLab.t server, which logs them in the database. The Class Files define which of the messages sent by the sensor node will be logged in the database. For our first job, we will use the RadioPerf-CREW image as Program File and RadioPerf-ReportMsg as Class File. Select them in the list on the left and click the Add>> button. At the bottom of the files tab, it is possible to upload your own images and class files.

Click Next or go to the motes tab. You can choose to run the firmware on all available sensor nodes, or pick specific nodes from the list. For our first experiment, we can just run the experiment on all available sensor nodes.

The scenario and platform tabs are not important for our first experiment, so just click the Submit button at the bottom of the page.

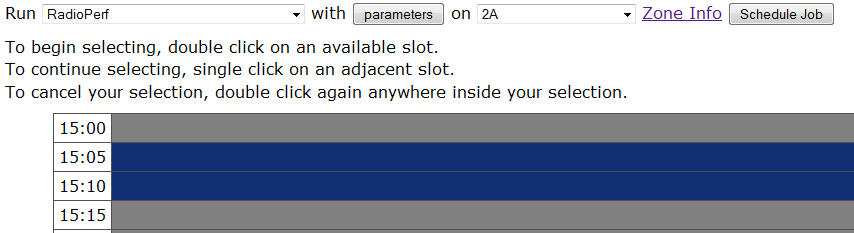

Now that we created our first job, we can schedule it to be executed on the testbed. On the schedule page select the job you want to execute and select a zone (part of the testbed) in which you want it to run. (Choose between 1A/1B/2A/2B/3A/3B). Now double click the first time slot where you want the experiment to start and select some consecutive blocks to determine the duration of the experiment. For this first experiment, 10 minutes should suffice. Click Schedule Job to confirm the selection.

To analyze the results of your experiment (during or after the run), you can log in to your personal database via the user info page. Take note of your wilab Database Name, which is listed near the top of the page (this is NOT your e-mail address). After clicking the phpmyadmin link, you can fill in your username and password. On the left side of the phpmyadmin page you see some general databases and one database which is named after your own user name. Click this database to see what tables it contains. For every job, there should be a table in the database (if it logged any info). Click the browse icon to see all the info your experiment has logged.

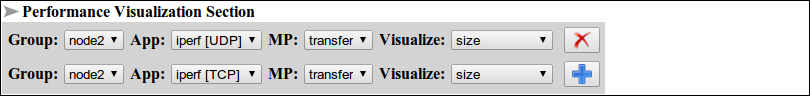

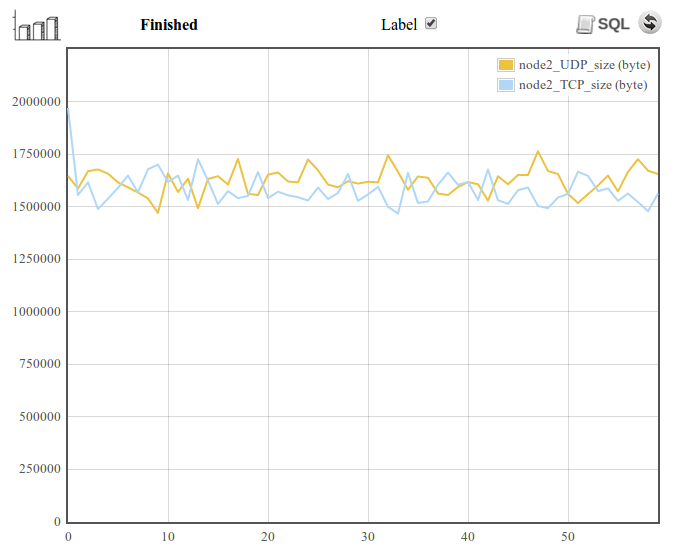

The<

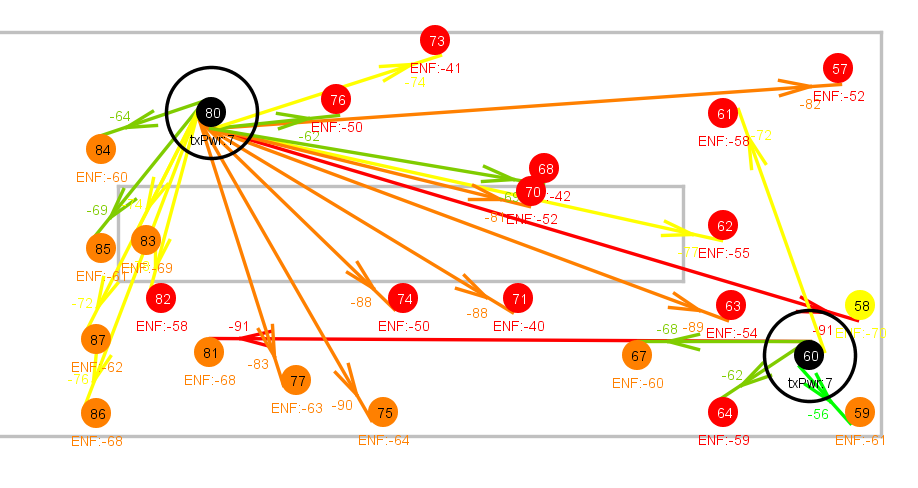

toolbox page contains a list of analyzer and visualizer XML files. Select the [w-iLab_t]_Visual_RadioPerf XML file and click Start Visual to start the Java applet that will visualize your experiment. Now fill in your database user name (not email) and password. You will need to install the sun-java6-plugin to get the applet working. The applet will NOT load with the alternative OpenJDK plugin (IcedTea). The applet has been tested in both Firefox and Internet Explorer. Once the applet has finished loading, you should now see a blue circle with the sensor node id for every active node in your experiment. After some time, every node should log some info to the database and the circles should change color and now also show the estimated noise floor (ENF). In the next step we will show how we can modify the experiment by changing some parameters.

In this step we want to schedule the same job, but change some parameters so that one node will broadcast (single hop) some data packets to all nodes in its neighborhood. To do so, go back to the schedule page, select the job you want to run and then click the parameters button. Now look for all parameters starting with RadioPerfP. The default values can be used except for the source parameter. If we want one node to transmit packets to all other nodes (destination value 65535 equals broadcast), we must change the source parameter to the id of the transmitting node (e.g. 80 if we run the experiment on zone 2A).

Now choose some time slots, select a zone and click the Schedule Job button. Repeat Step 6 to visualize the parametrized experiment. You should now see arrows from the sending node to all receiving nodes, with an RSSI indication next to the arrows.

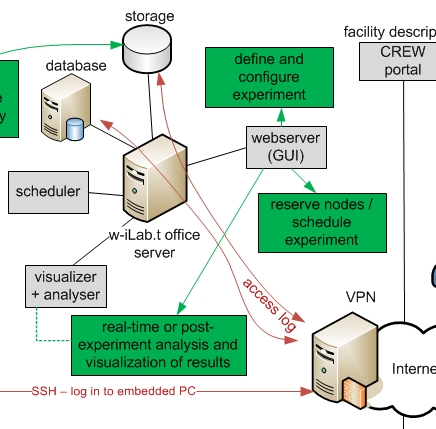

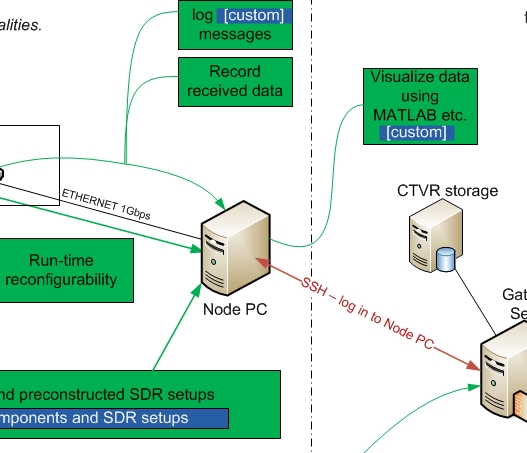

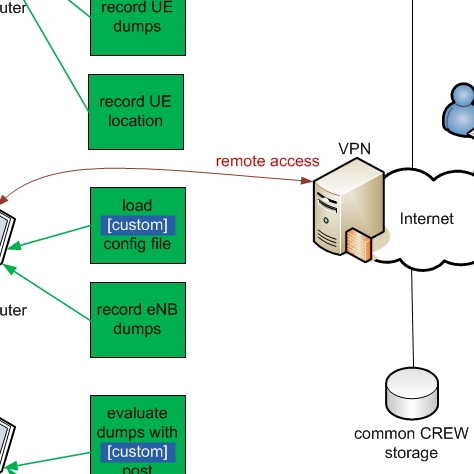

Schematic overview

Please click the thumbnail extracts below to get a full screen view of the different infrastructures. After clicking the thumbnails, click to zoom in.

The images may also be downloaded on the bottom of this page.


| TWIST - Berlin | w-iLab.t - Gent | Iris - Dublin | LTE-Advanced - Dresden | Log-a-tec - Ljubljana |
|---|---|---|---|---|
| Hardware overview | Hardware overview | Hardware overview | Hardware overview | Hardware overview |
| Usage overview | Usage overview | Usage overview | Usage overview | Usage overview |
| Access documentation | Access documentation | Access documentation | Access documentation | Access documentation |

| Attachment | Size |

|---|---|

| wilab-UsageOverview.png | 158.04 KB |

| wilab-HardwareOverview.png | 183.3 KB |

| TWIST-UsageOverview.png | 119.91 KB |

| TWIST-HardwareOverview.png | 114.63 KB |

| LTE-UsageOverview.png | 88.02 KB |

| LTE-HardwareOverview.png | 188.22 KB |

| vesnaHardwareOverview_v3-s.jpg | 1.48 MB |

| IrisTestbedHardwareOverviewY2_v1.1.jpg | 402.97 KB |

| IrisUsageOverview_v2.jpg | 482.33 KB |

IRIS documentation

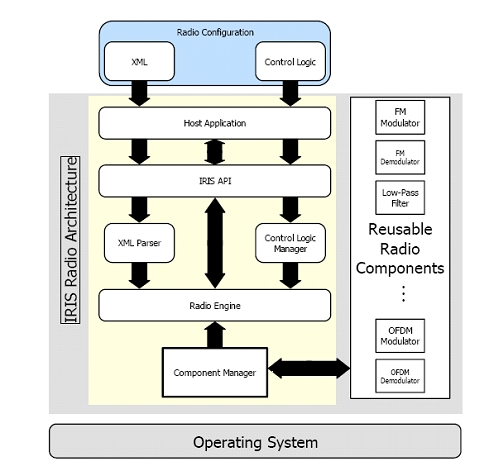

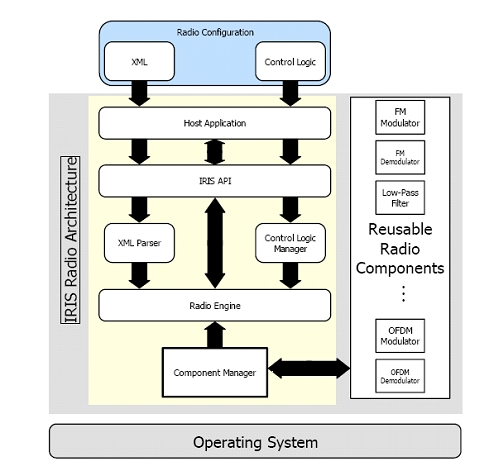

The reconfigurable radio consists of a general-purpose processor software radio engine, known as IRIS (Implementing Radio in Software) and a minimal hardware frontend. IRIS can be used to create software radios that are reconfigurable in real-time.

Please use the links below to learn more about how Iris can be used.

Testbed Description

Iris is a software radio architecture that has been developed by CTVR, The Telecommunications Research Centre at TCD. Written in C++, Iris is used for constructing complex radio structures and highly reconfigurable radio networks. Its primary research application is to enable a wide range of dynamic spectrum access and cognitive radio experiments. It is a GPP-based radio architecture and uses XML documents to describe the radio structure. This testbed provides a highly flexible architecture for real-time radio reconfigurability based on intelligent observations the radio makes about its surroundings.

Each radio is constructed from fundamental building blocks called components. Each component makes up a single process or calculation that is to be carried out by the radio. For instance, a component might perform the modulation on the signal or scale the signal by a certain amount. Each component supports one or more data types and passes datasets to other components, along with some metadata such as a time stamp and sample rate. There is a data buffer between each component to ensure the data is safe, even if one component is processing data much faster than another.

All components within the radio exist inside an engine. An engine is the environment in which one or more components operate. Each engine defines its own data-flow and reconfiguration mechanisms and runs one or more of its own threads. As with components, engines are linked to each other by data buffers. Iris currently features two engine types, the PN Engine and the Stack Engine. The PN Engine is typically used for PHY layer implementations and is designed for maximum flexibility. It has a unidirectional data flow and runs one thread per engine. The Stack Engine is designed for the implementation of the network stack architecture, where each component is a layer within the stack and runs its own thread of execution. It also has a bidirectional data flow.

Iris’s capability to reconfigure the radio on the fly lies in the controllers. A controller exists independently of any engine and runs in its own thread of execution. A controller subscribes to events within components and reconfigures parameters in other components based on the observation of these events. For instance, a controller could be set up to observe the number of packets passing through a certain component and, upon reaching a certain number of packets, change the operating frequency of the radio.

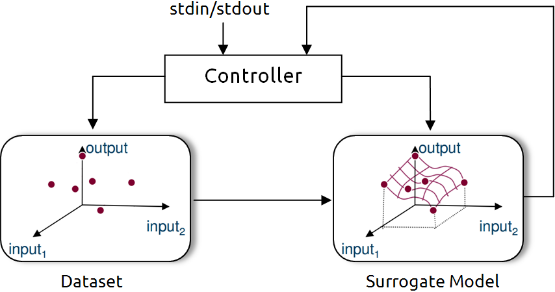

The Iris 2.0 architecture is illustrated in the following figure.

A radio is constructed and configured using XML documents. Each component is named and has its inputs, outputs and exposed parameters explicitly specified. Engines are declared and components are placed in their relevant engines. Controllers are then declared at the top of the XML document and the links between each component are declared at the end of the document.

The layout of the testbed hardware can be found here.

The testbed can be accessed directly here.

Apply for an account

Before gaining access to the Iris testbed it is essential to familiarise yourself with the Iris software. This is done through the Iris Wiki page. The Wiki gives you full instructions on how to download and install the Iris software onto your own computer as well as instructions on how to get started in using it. Use of the Wiki page requires a user account and password. These can be obtained through emailing either tallonj@tcd.ie or finnda@tcd.ie.

An overview of the Iris architecture and some of its capabilities is available here.

At this stage users will be able to perform experiments using Iris, independent of the Iris testbed, using either the simulated "channel component" or in conjunction with the USRP (1/2/N210 etc.).

Access to the Iris testbed is given out separately from access to the Wiki. This is because access to the Iris testbed is often not necessary if users have USRP hardware of their own available.

However, if remote access to the Iris testbed (after installing and trying out the Iris software) is required, details of how to obtain access to the testbed can be found here.

Remote access

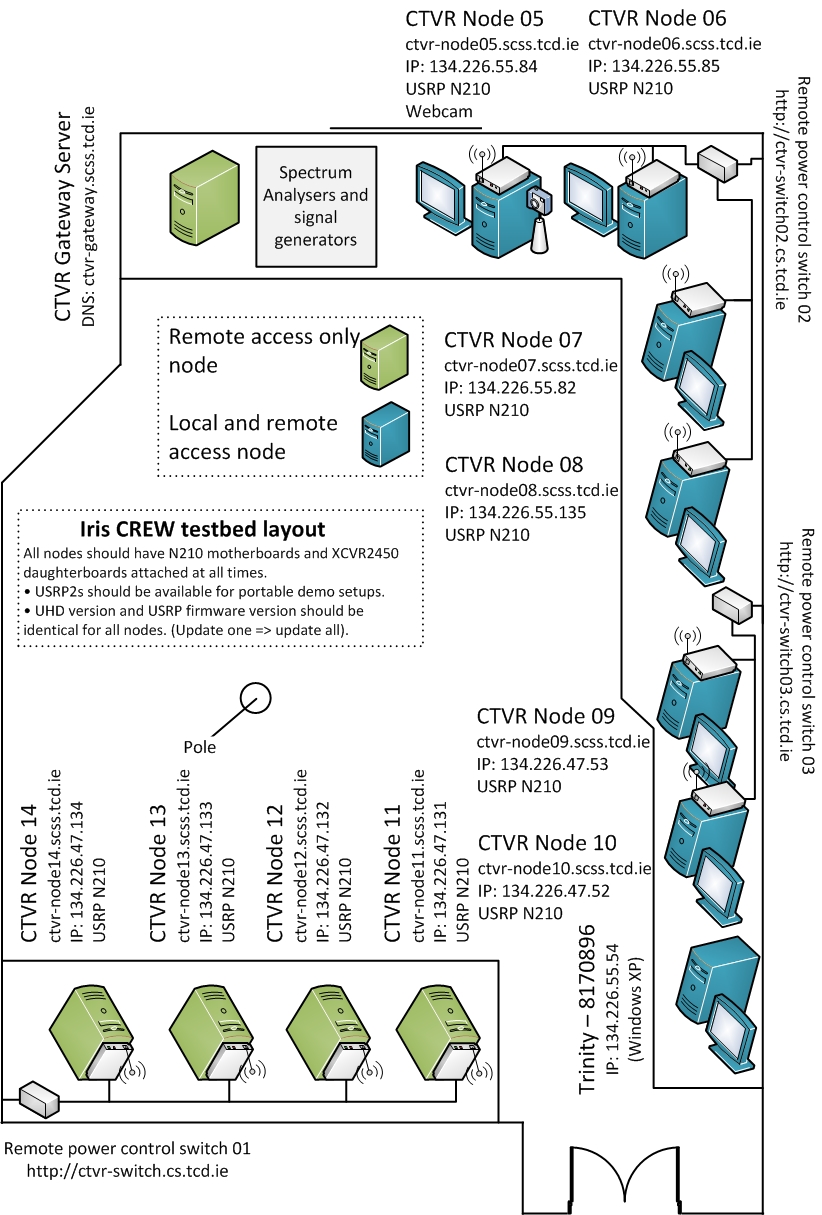

The CTVR Iris testbed is currently being reconfigured. The node locations may not reflect exactly those shown in the diagram. For downtimes make sure to check the calendar.

The testbed is designed to permit fully remote access for carrying out experiments. This page provides information required to use the testbed from a remote location.

Access to the Iris testbed is achieved through the ctvr-gateway server. User login details are required to gain access to this server. These can be applied for by emailing either tallonj@tcd.ie or finnda@tcd.ie, explaining the nature of the experimentation to be carried out within the testbed. Due to the limited number of nodes available, applications must be handled on a case-by-case basis.

Once login details have been obtained, the experimenter will need to schedule an experiment.

Remote VNC Access

The experimenter will then be able to access any of the testbed nodes via VNC in the following way. Use the login details you were issued to log in to ctvr-gateway.cs.tcd.ie via SSH. Once you have a terminal open on this server, SSH again onto the node you wish to access and start a VNC server there, as follows:

ssh nodeuser@ctvr-node07.cs.tcd.ie

vncserver :1 -geometry 1280x900

This will create a vncserver on display 1 of node 07 and with a 1280x900 screen resolution. Once the server is running, use a VNC client to connect. In this case, we would connect to ctvr-node07.cs.tcd.ie:1. When you are finished, kill the VNC server on the testbed node as follows:

vncserver -kill :1
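The login sequence above can be collected into a small script. This is a minimal sketch rather than an official testbed tool: the helper names `node_host` and `vnc_endpoint` and the account placeholder `myuser` are hypothetical, and the script only prints the commands (a dry run) so it can be checked before anything is executed.

```shell
#!/bin/sh
# Hypothetical helpers; only the host names and the vncserver commands
# come from the text above.

# Print the host name of a testbed node, e.g. 7 -> ctvr-node07.cs.tcd.ie.
# Pass the number without a leading zero (printf would read 08 as octal).
node_host() {
  printf 'ctvr-node%02d.cs.tcd.ie\n' "$1"
}

# Print the address a VNC client connects to: <host>:<display>.
vnc_endpoint() {
  printf '%s:%d\n' "$(node_host "$1")" "$2"
}

# Dry run: print the commands instead of executing them.
NODE=7
DISPLAY_NO=1
GATEWAY_USER=myuser   # placeholder for the login you were issued
echo "ssh ${GATEWAY_USER}@ctvr-gateway.cs.tcd.ie"
echo "ssh nodeuser@$(node_host "$NODE")"
echo "vncserver :${DISPLAY_NO} -geometry 1280x900"
echo "VNC client target: $(vnc_endpoint "$NODE" "$DISPLAY_NO")"
echo "vncserver -kill :${DISPLAY_NO}"
```

Changing `NODE` and `DISPLAY_NO` adapts the printed commands to another node or display; the printed lines can then be run by hand in the order shown.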

The following pages will be of use in setting up an experiment:

| Iris architecture overview | Iris Testbed Layout | Powering the USRPs remotely |
|---|---|---|
| Spectrum analyser remote access | First example experiment | Use of the testbed webcam |

Use of licensed bands

For use of wireless spectrum outside of unlicensed bands the experimenter is directed here.

| Attachment | Size |

|---|---|

| Iris Experiment.jpg | 79.47 KB |

| webcam_symbol.png | 6.43 KB |

First example experiment

The full installation instructions for Iris can be found at:

https://ntrg020.cs.tcd.ie/irisv2/wiki/ExampleExperiments

The wiki contains information on how to install Iris on both Windows and Linux, as well as information on how to run a radio and on the testbed in general.

In this sample experiment we will run a simple radio and then adapt a component and add a controller, with a view to exploring the basic functionality of both. The steps a researcher should follow to complete the experiment are outlined below.

1. Follow the instructions outlined in the wiki to run the radio OFDMFileReadWrite.XML.

2. If this radio is functioning correctly, “radio running” will appear on the command line.

3. To add a controller to the radio, we must first create an event in one of the components to which the controller can subscribe. To do this, open the shaped OFDM modulator and register an event in the constructor function.

4. Once the event is registered we must create a condition that must be satisfied for the event to be activated. To do this, open the “process” function (as this is where all the calculations are carried out) and specify a condition that activates the controller whenever, for example, 100 packets have passed through.

5. Once this has been done the controller can be made. Open the “example” controller; this gives us a template to work with.

6. Within the controller we must do two things, subscribe to the event that has been set up in the component and specify the parameter that we wish to change as well as the value we wish to change it to.

7. To change the parameter, we specify the name of the parameter as well as the component and engine that it is in. These are assigned in the “ProcessEvent” function.

8. The logic that dictates what the parameter is changed to also goes in this function.

9. Recompile all the relevant code, include the controller in the XML file and run the radio as before.

If the radio is running properly, you should see the event being triggered on the command line and the new value of the parameter in question.

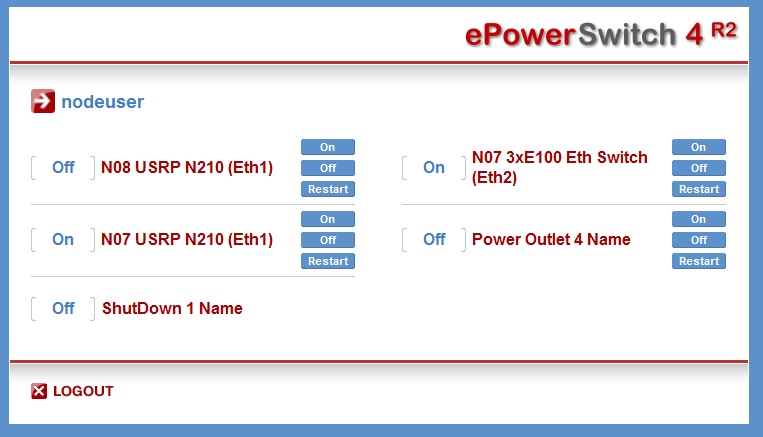

Control of the USRP remote power switches

Powering the USRPs

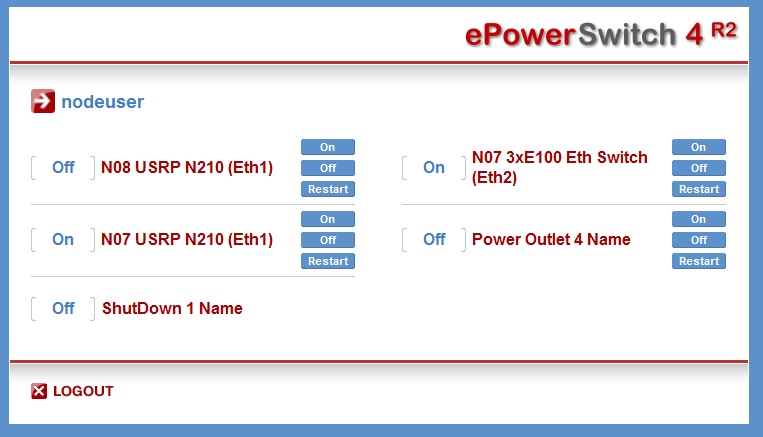

In the testbed we have installed two remote power switches which allow us to remotely power each of the USRPs on and off. To access the remote power switches you must first be logged into one of the testbed nodes. Details on obtaining access can be found here. These switches can be controlled through a web interface.

Access the switches by navigating to

http://ctvr-switch.cs.tcd.ie

or

http://ctvr-switch02.cs.tcd.ie

in the web browser of one of the testbed nodes (again, the power switch interfaces can only be accessed from the testbed nodes). On doing so you will see a similar interface to the following:

The login details are identical to those used to access the nodes themselves. Here, you can power the USRPs for each node on and off. Please remember to power USRPs off when you have finished using them. The positioning of the different testbed nodes as well as the spectrum analyser and signal generator can be found here.

Powering the USRPs via command line/scripts

The remote switch can also be accessed via HTTP POST commands, using a tool such as curl, or equivalent calls in a script or program. On a UNIX-based system with curl installed,

curl --data 'P<port>=<command>' http://nodeuser:ctvrnodepass@ctvr-switch.cs.tcd.ie/cmd.html

will alter the state of socket <port> according to <command>.

<port> choices are as follows:

For switch http://ctvr-switch.cs.tcd.ie

* 1 - Node 08 USRP N210 (ETH1)

* 2 - Node 08 USRP N210 (ETH1)

* 3 - Node 05 USRP N210 (ETH1)

* 4 - Node 06 USRP2 (ETH1)

For switch http://ctvr-switch02.cs.tcd.ie

* 1 - Currently unspecified

* 2 - Currently unspecified

* 3 - Currently unspecified

* 4 - Currently unspecified

<command> choices are:

* 0 - Switch Off

* 1 - Switch On

* t - Toggle state

* r - Restart

Commands to multiple ports can be strung together using ampersands, as per the following example:

curl --data 'P0=r&P1=r&P2=r' http://nodeuser:ctvrnodepass@ctvr-switch.cs.tcd.ie/cmd.html
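When several sockets are switched from a script, the payload string can be assembled from port/command pairs. The following is a minimal sketch: `switch_payload` is a hypothetical helper, not part of the switch or of curl, and it accepts single-digit ports together with the four commands listed above. The script prints the resulting curl call instead of sending it.

```shell
#!/bin/sh
# Hypothetical helper: build the --data payload for the power switch
# from port=command pairs, e.g. "1=r 2=r" -> "P1=r&P2=r".
# Ports are single digits; commands are 0, 1, t or r as listed above.
# The body runs in a subshell so its variables do not leak to the caller.
switch_payload() (
  payload=""
  for pair in "$@"; do
    case "$pair" in
      [0-9]=[01tr]) payload="${payload:+${payload}&}P${pair}" ;;
      *) echo "invalid port=command pair: $pair" >&2; exit 1 ;;
    esac
  done
  printf '%s\n' "$payload"
)

# Dry run: print the curl call rather than sending it.
SWITCH_URL='http://nodeuser:ctvrnodepass@ctvr-switch.cs.tcd.ie/cmd.html'
echo "curl --data '$(switch_payload 1=0 2=0)' $SWITCH_URL"
```

Rejecting malformed pairs before the request is sent avoids posting a half-valid command string to the switch; the printed line can be pasted into a terminal on a testbed node once it looks right.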

| Attachment | Size |

|---|---|

| e-power switch.jpg | 63.91 KB |

Iris Testbed Layout

The CTVR Iris testbed is currently being reconfigured. The node locations may not reflect exactly those shown in the diagram. For downtimes make sure to check the calendar.

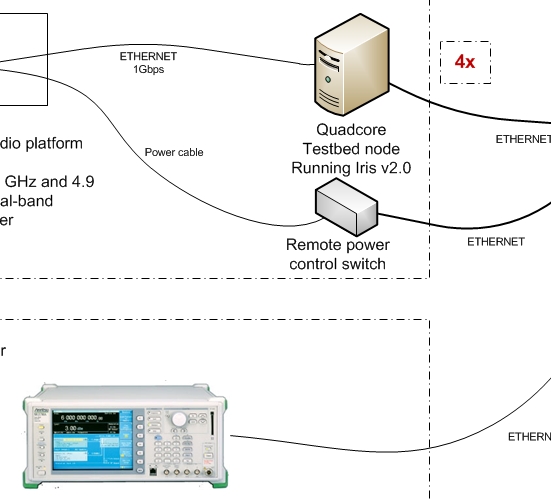

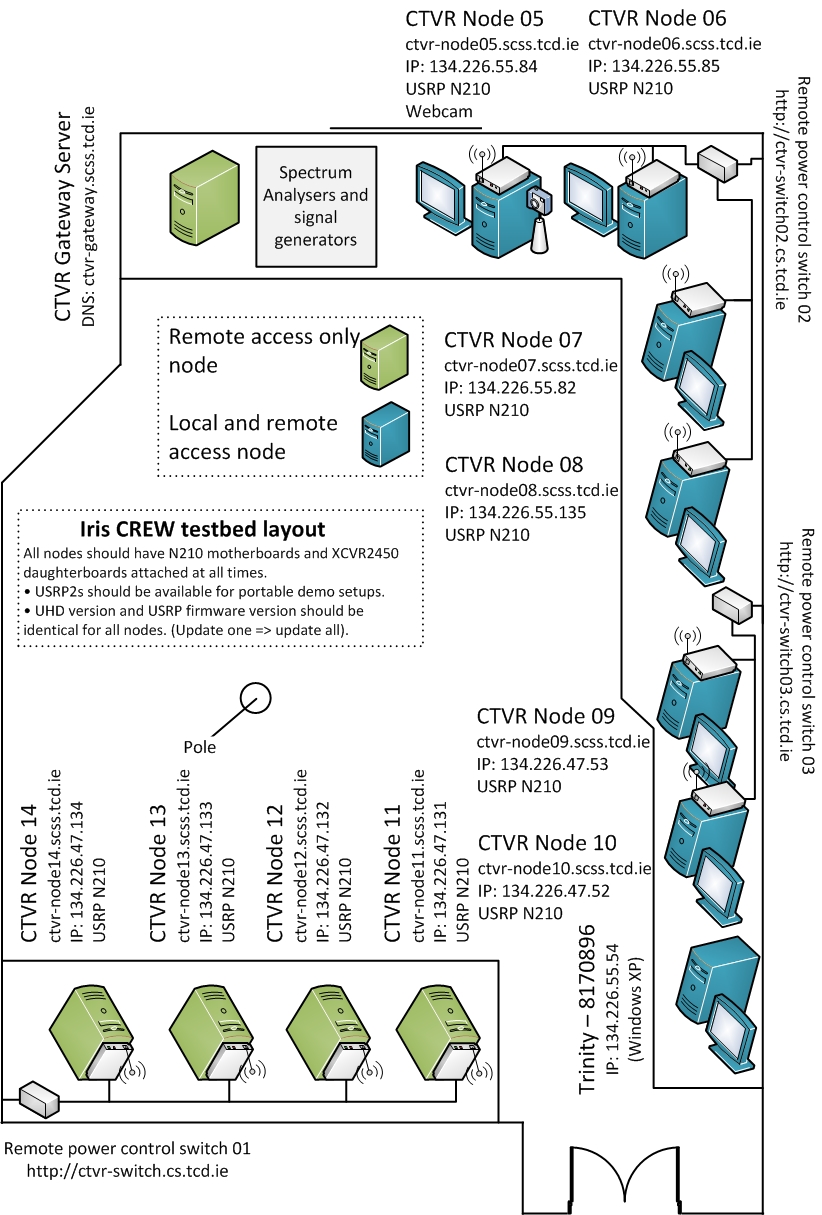

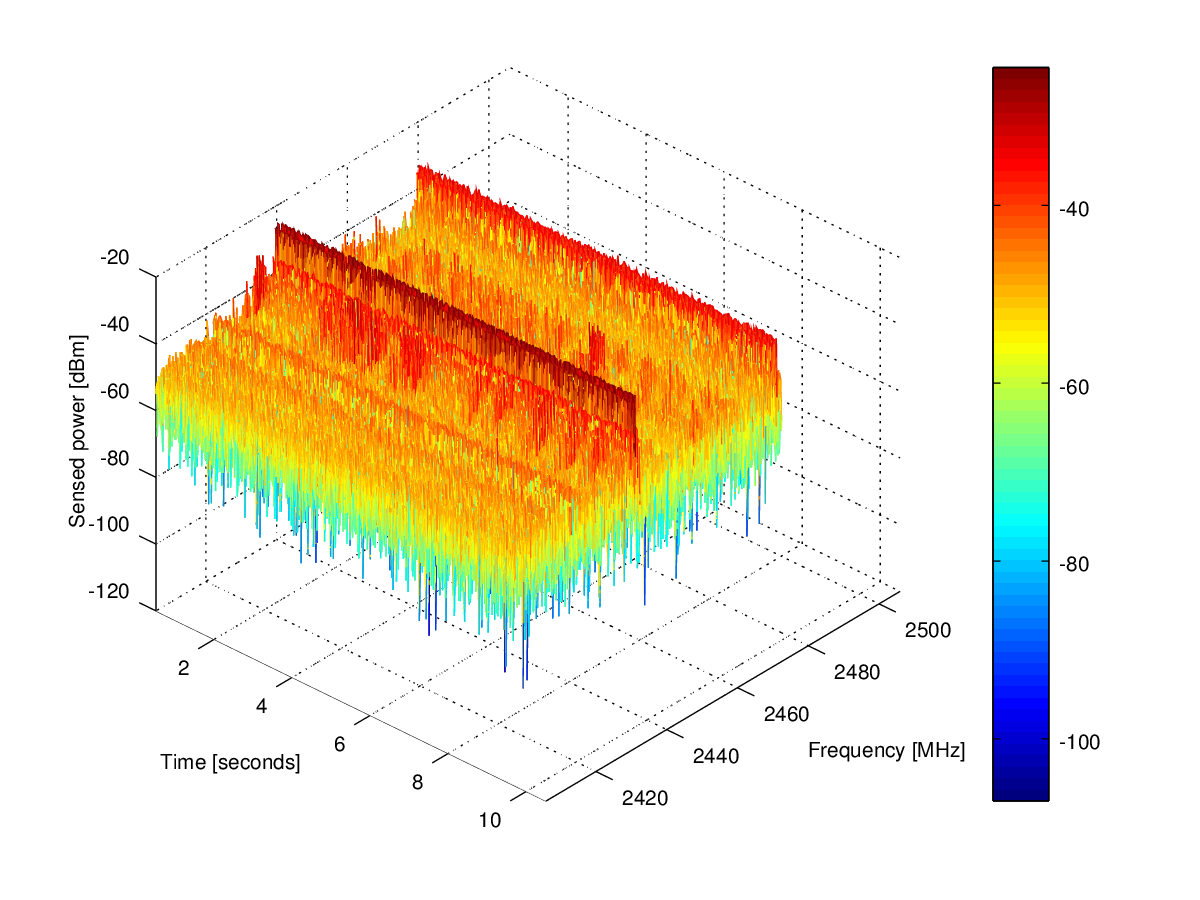

The testbed is laid out as follows. Currently there are 8 remotely accessible Quad core machines each equipped with a USRP2/N210 and an XCVR2450 daughterboard. The USRP2s have a 24 MHz bandwidth (using Gigabit Ethernet to communicate between the USRP and the computer). The daughterboards are capable of transmitting between 2.4 and 2.5 GHz and also between 4.9 and 5.9 GHz.
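Before booking a frequency range it can help to check that the planned centre frequency falls inside one of the two daughterboard bands quoted above. The following is a minimal sketch: `in_xcvr2450_range` is a hypothetical helper, it works on whole MHz values only, and treating the band edges as inclusive is an assumption.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a centre frequency in MHz lies inside
# one of the two bands quoted above (2400-2500 MHz or 4900-5900 MHz).
# Integer MHz only; band edges are treated as inclusive (an assumption).
in_xcvr2450_range() {
  { [ "$1" -ge 2400 ] && [ "$1" -le 2500 ]; } ||
    { [ "$1" -ge 4900 ] && [ "$1" -le 5900 ]; }
}

for freq in 2450 3500 5200; do
  if in_xcvr2450_range "$freq"; then
    echo "$freq MHz: within the daughterboard bands"
  else
    echo "$freq MHz: outside the daughterboard bands"
  fi
done
```

The check only covers the tuning range; the 24 MHz bandwidth limit of the USRP2 still applies to the signal itself.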

The testbed diagram shows the "fixed" testbed layout; however, some "custom" layouts may also be accommodated for specific experiments.

Additionally, before running an experiment on the testbed, experimenters must gain login details for the ctvr-gateway node, through which all of the other testbed nodes are accessed. Information on applying for login details can be found here. Experiments must also previously be scheduled on the testbed calendar, details of which can be found here.

| Attachment | Size |

|---|---|

| Testbed_Diagram_v5.2.jpg | 485.72 KB |

Iris Testbed best practices

1. Everything in the testbed has an exact place

· Each USRP and node has been assigned a table and number.

· Unused daughterboards will be placed in proper storage places.

2. Everything goes back to the exact place after any experiment that causes it to be moved.

3. Clonezilla is used on all nodes meaning that nodes will be reset to a specific version of IRIS on startup.

4. Bearing this in mind everyone should take care to store data in the data partition and not elsewhere on a node as it will be lost otherwise.

5. The firmware in the USRPs will be updated when a new release becomes stable. All hardware will be updated at once rather than a subsection of hardware.

6. If any piece of equipment gets broken, or if there is an issue with its functionality (e.g. it only works for a certain bandwidth or has very low output power), the IRIS testbed users mailing list iris-testbed-users@scss.tcd.ie must be informed. This will be relayed to the wider group and a note will be made on the appropriate wiki page https://ntrg020.cs.tcd.ie/irisv2/wiki/TestbedInventory.

7. All experiments must be scheduled using the Google calendar <ctvr.testbed> specifying all of the following:

· Name of experimenter

· Date and time of booking

· Testbed node number(s)

· Daughterboard(s) of use

· Frequency range(s) of use

8. The testbed should not be used for simulations.

9. The testbed room should be kept secure.

10. Testbed users should sign up to the following mailing lists:

· IRIS support mailing list https://lists.scss.tcd.ie/mailman/listinfo/iris2

· IRIS testbed users mailing list https://lists.scss.tcd.ie/mailman/listinfo/iris-testbed-users for enquiries regarding the Iris testbed.

· IRIS commit mailing list https://lists.scss.tcd.ie/mailman/listinfo/iris2commit for commit notifications.

11. Short descriptions of all experimental work using the testbed should be provided in the projects section of the IRIS wiki https://ntrg020.cs.tcd.ie/irisv2/wiki/ActProjects.

Scheduling an experiment

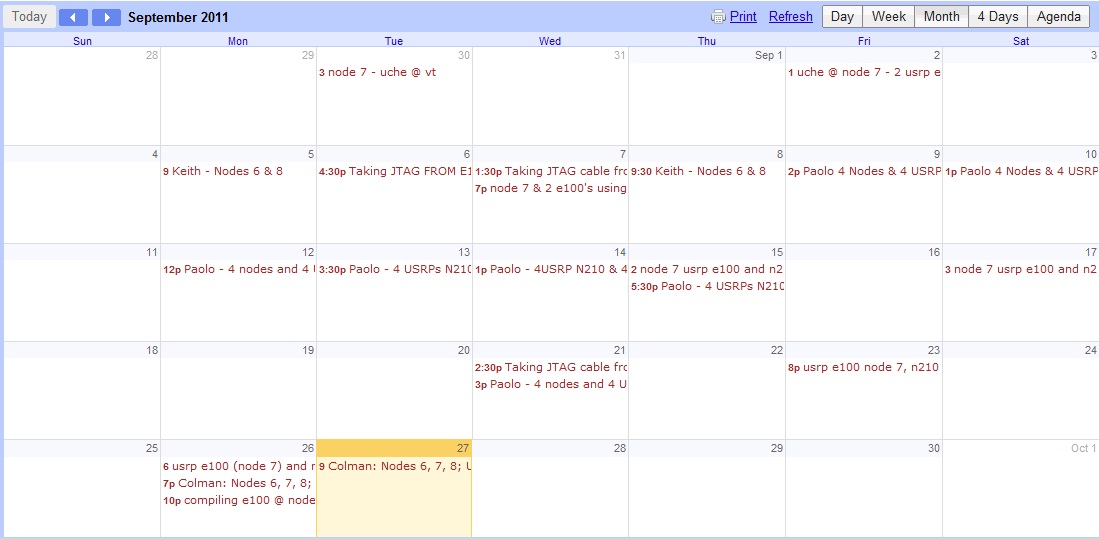

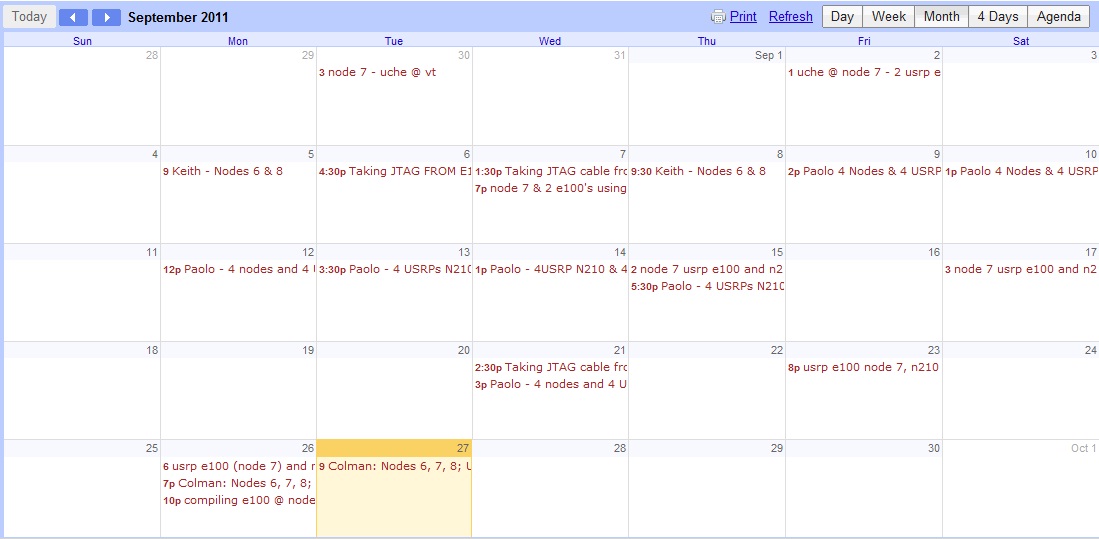

On receiving testbed login details, the experimenter will also be issued with access to the Google calendar used for scheduling experiments. It is essential to schedule experiments, specifying:

* Which nodes

* Number of USRPs/daughterboards

* Frequencies of operation

* If the spectrum analyser/signal generator is also needed

* Your name

prior to use of the testbed.

An example shot of the calendar is shown below.

| Attachment | Size |

|---|---|

| ctvr_testbed_google_calendar.jpg | 101.79 KB |

Spectrum analyser remote access

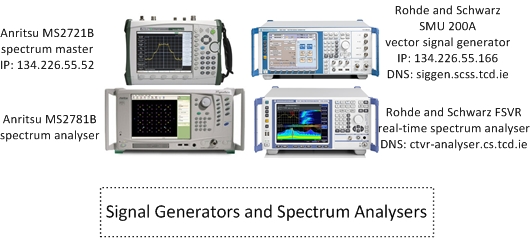

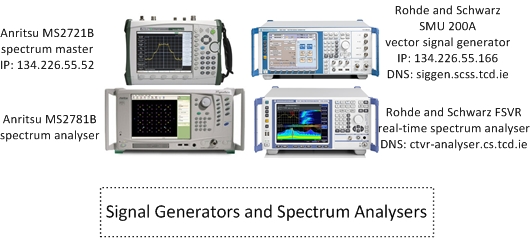

The main spectrum analyser in the testbed room is a Rohde & Schwarz FSVR real-time analyser.

- Host name: ctvr-analyser.cs.tcd.ie

- IP address: 134.226.55.156

- Frequency range: 10Hz - 7GHz

- Support for IQ analysis (inc. OFDM)

- Maximum sampling rate for IQ acquisition: 128MS/sec

The spectrum analysers are situated as shown in the testbed layout diagram; however, we can easily relocate the receive antenna of the spectrum analyser around the lab if needs be for a certain experiment. We also have in the testbed:

- Rohde & Schwarz SMU 200A - Vector Signal Generator

- Anritsu MS2781B - Signal Analyser

- Anritsu MS2721B - Spectrum Master (handheld spectrum analyser)

Probably the easiest way to acquire data from the Rohde & Schwarz spectrum analyser is using the testbed Windows node "Trinity-8170896" which is accessible via VNC through the ctvr-gateway node. This node runs Rohde & Schwarz IQWizard, a programme which allows simple acquisition of IQ data, in a range of formats. However, remote access directly to the spectrum analyser via VNC is also available.

Remote access via VNC

The spectrum analyser can be accessed from any of the testbed nodes or from the ctvr-gateway server. Information on obtaining access to these nodes can be found here.

* Verify that the analyser is switched on and connected to the network by pinging it using

ping ctvr-analyser.cs.tcd.ie

* Use a VNC client to connect to ctvr-analyser.cs.tcd.ie

Remote control and IQ acquisition using Matlab

It is also possible to perform remote control and IQ acquisition using Matlab.

| Attachment | Size |

|---|---|

| Generators and Analysers - one SigGen.jpg | 87.51 KB |

Test and Trial Ireland

In order to enable research into innovative new technologies, which would require transmission and reception testing within licensed bands, Test and Trial Ireland have the ability to make certain bands in the Irish wireless spectrum available for use. Test and Trial Ireland is a licensing programme which was launched by the Commission for Communications Regulation in Ireland (ComReg).

If the experimenter requires use of licensed bands further details on the programme, as well as information about how to apply for spectrum, are available at http://www.testandtrial.ie/.

Use of the testbed webcam

In order to view the testbed remotely and to enable experiments with live camera streaming a webcam has been added to the testbed on node05.

The easiest way to view the testbed using the webcam is by connecting to node05 via VNC and opening "Cheese Webcam Booth".

We can also reposition the camera if required for certain experiments.

LTE advanced documentation

This section contains basic information about Dresden's LTE/LTE+-like testbed. Please use the links below to learn more about how the testbed can be used.

If you have any questions do not hesitate to contact us via crew@ifn.et.tu-dresden.de

Introduction

In this section the basic information about the testbed is presented. The possibilities and limitations of the setup are also described.

Basic information

TUD coordinates the test activities that are related to the EASY-C testbed. Cellular use cases and CR-related field trials are provided by the Dresden test bed, which is supervised by TUD.

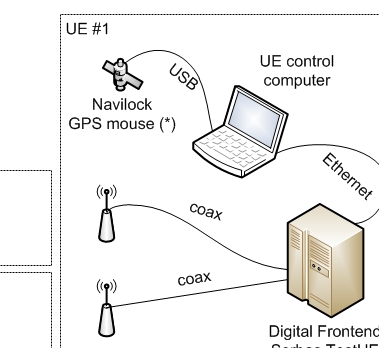

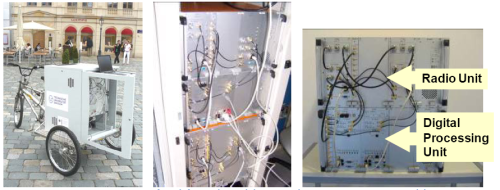

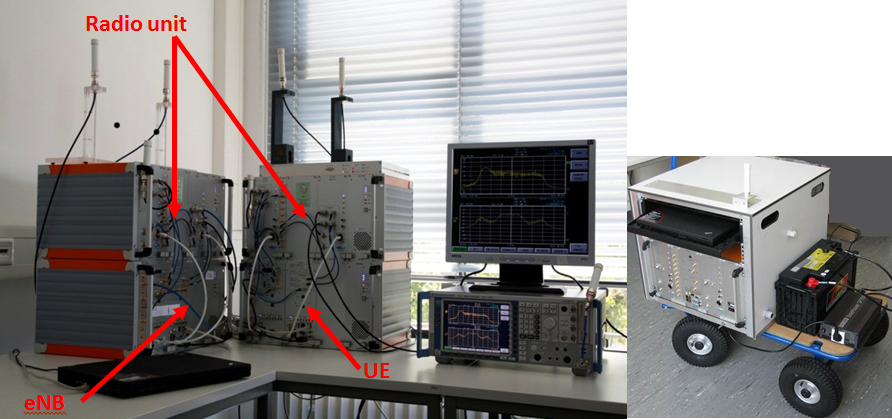

The TUD contribution is based on the EASY-C campus infrastructure, i.e., the EASY-C outdoor lab test bed, which is directly operated by the Vodafone Chair research team. An LTE-like cellular infrastructure is used in which relevant network parameters such as frame error rates, outage events, throughput and latency are measured. One base station at rooftop level will be used, which serves multiple UEs. This BS resides at the faculty of electrical engineering and information technology, TUD. Stationary and mobile user equipment are used. Depicted below, from left to right: mobile test UE, base station equipment, UE lab equipment.

EASY-C test equipment.

External users of the TUD test bed need to install their own equipment at the TUD test site. A predefined test setup is used which provides well defined and reproducible EASY-C LTE traffic – good for the CREW cognitive radio benchmarking initiative. The LTE network parameters are constantly monitored and recorded. The CR transceivers are then activated where the LTE performance parameters are compared for the non-CR and CR case. Hence, it will be possible to benchmark the impact of various CR schemes on a cellular infrastructure through a well-defined set of reproducible test setups.

Another possibility for external users is to connect via Remote Desktop to the TUD indoor test bed to perform experiments with a fixed setup of one eNB, one UE, National Instruments USRP 2920 and one Signalion HaLo device.

Please click on the thumbnail below to get an overview picture of the hardware available in LTE advanced testbed.

| Attachment | Size |

|---|---|

| p01.png | 179.31 KB |

| ltep03.png | 14.91 KB |

Usage of the testbed

Two experimentation setups are available: the indoor lab and the outdoor lab.

In order to conduct experiments in the LTE+ testbed, participants are required to bring their spectrum sensing and/or secondary system hardware to Dresden, if the experiment cannot be performed by a USRP or HaLo device. In order to pre-evaluate certain theories and algorithms, a testbed reference signal in the form of baseband I/Q samples can be provided. Further, during the experiments it is possible to dump transmitted and received signals in the same format. This allows for offline post-processing of the signals, evaluation of the signals and replay in other testbeds.

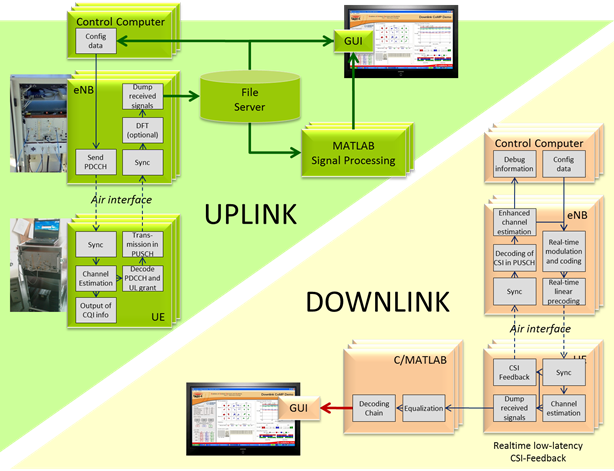

It is important to distinguish if a downlink (DL) or an uplink (UL) experiment is desired.

In uplink experiments, it is possible to serve up to 4 UEs. The UEs use 1 antenna for transmission, while the eNBs can receive with 1 or 2 antennas. The resolution for scheduling a transmission is 1 ms, which corresponds to 1 TTI (transmission time interval). Scheduling can be done for a total duration of several minutes. The number of occupied PRBs is either 10, 20, 30 or 40 (cf. Table 1). QPSK, 16QAM and 64QAM modulation are supported.

In downlink, up to 4 UEs and up to 4 eNBs can be used simultaneously. The eNBs can transmit with up to 2 antennas and the UEs can receive with up to 2 antennas, thus up to 2 streams per UE can be sent. Time resolution is 1 ms corresponding to 1 TTI (same as UL). The number of occupied PRBs can be 12, 24, 36 or 48 (cf. Table 2).
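The PRB counts and the 1 ms TTI can be turned into rough planning figures. The following is a minimal sketch under one stated assumption: a PRB is taken to span 180 kHz (12 subcarriers at 15 kHz), as in standard LTE, which the text above does not confirm for this testbed. The helper names `prb_bandwidth_khz` and `ttis_in_seconds` are hypothetical.

```shell
#!/bin/sh
# Assumption: one PRB spans 180 kHz (12 subcarriers x 15 kHz), as in
# standard LTE; the text above does not confirm this for the testbed.
prb_bandwidth_khz() {
  echo $(( $1 * 180 ))
}

# Number of 1 ms TTIs in a schedule of the given length in seconds.
ttis_in_seconds() {
  echo $(( $1 * 1000 ))
}

# Uplink PRB allocations named in the text.
for n in 10 20 30 40; do
  echo "UL ${n} PRBs: $(prb_bandwidth_khz "$n") kHz occupied"
done

# Downlink PRB allocations named in the text.
for n in 12 24 36 48; do
  echo "DL ${n} PRBs: $(prb_bandwidth_khz "$n") kHz occupied"
done

echo "TTIs in a 2-minute schedule: $(ttis_in_seconds 120)"
```

Under that assumption the largest downlink allocation (48 PRBs) occupies 8640 kHz, and a schedule of a few minutes already spans more than a hundred thousand TTIs, which is worth keeping in mind when sizing signal dumps.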

The evaluation of an experiment happens via dumps of the received signals at the UEs / eNBs. While in the UL, signal dumps can be recorded for all eNBs in synch, the dumping process needs to be initiated manually and out of synch in the DL.

The signal dumps contain the received time samples as well as additional control information. Further processing in Matlab allows derivation of indicators like SINR, BLER, etc. in semi-realtime/offline.

The performance evaluation of experiments can be performed in real-time as well as semi real-time and offline. Real-time measures include

- Received Signal Strength Indicator (RSSI),

- Reference Signal Receive Power (RSRP),

- Path loss, and

- Channel Quality Indicator (CQI; derived from SINR).

In semi real-time, additionally QAM constellations and block error rate (BLER) can be monitored via file dump of I/Q samples and Matlab post processing. Further performance measures could be obtained in offline processing from those file dumps.

An overview picture of the usage of the LTE advanced testbed is shown below.

Deviations from LTE release 8

As the eNB and UE provide only minimal LTE release 8 (Rel 8) PHY/MAC functionality, it is particularly important to note that the DL frame structure and control channels differ slightly. Differences include:

- PDCCH is always in the second OFDM-symbol (position is variable according to Rel. 8),

- PHICH (HARQ Indicator Channel) is not in the first OFDM symbol and has a different structure/content,

- PCFICH (Control Format Indicator Channel) is not supported, and

- PBCH (Broadcast Channel) is not supported.

Further, the OFDM scheme is used in the uplink, whereas Rel. 8 specifies SC-FDMA for the uplink. Also, the 5 MHz and 10 MHz modes are not supported, thus the testbed operates in 20 MHz mode only.

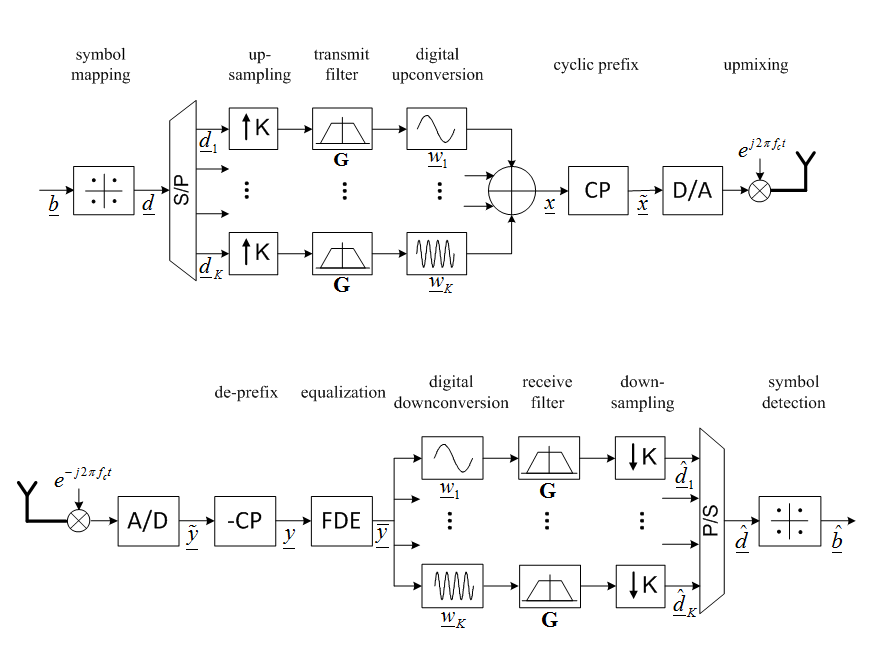

An overview of the uplink and downlink processing chains can be seen below:

Uplink and Downlink Processing Chain

| Attachment | Size |

|---|---|

| ltep04.png | 164.57 KB |

Getting started with the experiments

If you are new to our testbed, first go through the instructions and see what needs to be done before, during and after the experiment. To get more familiar with the testbed, we recommend that you go through the basic tutorial. Once you have understood it, you may want to get to know the full possibilities of the testbed, and you can proceed to the advanced tutorial.

Instructions for external participants

Before the experiment:

- Contact the testbed staff, make sure the hardware is compatible (frequencies) and the testbed supports all features necessary for the intended experiment

- If necessary for the experiment and after the initial contact with the research staff: get an account to access the network and computers. Remote desktop access over the internet is also possible.

- Make sure there will be enough testbed hardware available (indoor/outdoor?)

- If reasonable, ask for reference signal files to check compatibility with external hardware

- Get familiar with using the spectrum analyzer R&S FSQ

- Prepare UE/eNB configuration files or ask testbed staff to do it

During the experiment:

- Carefully check the setup

- Use a terminator when there is no antenna/cable plugged

- Check if all cables are ok

- Make sure you are using the latest version of the config tool to configure the hardware

- Keep a record of the config files you use as you are changing parameters

- Check the signal on the spectrum analyzer, several things can be validated that way

- If nothing else seems to work, reboot and reconfigure the UE/eNB prototype hardware

- When in doubt, ask the testbed staff

After the experiment:

- Put everything back where you took it from

Example experiment

Detection of occupied frequency bands is the foundation for applications of dynamic spectrum access (DSA). In order to convince network operators that DSA is feasible in cellular frequencies, it has to be shown that a reliable detection of their primary signals is possible. In this section, we present an experimental validation of an algorithm and hardware that can detect the presence of a Long Term Evolution (LTE) signal. In contrast to the classical mono-antenna approach, an array of antennas is used, which enhances the detection capabilities, particularly when interference is present alongside the useful signal.

Objectives of experiments

- Reliable sensing in real environment

- Performance gain of multi-antenna vs mono-antenna

- Effectiveness of primary and secondary synchronisation criteria

- Parametrization of sensing algorithm (detection threshold)

Setup

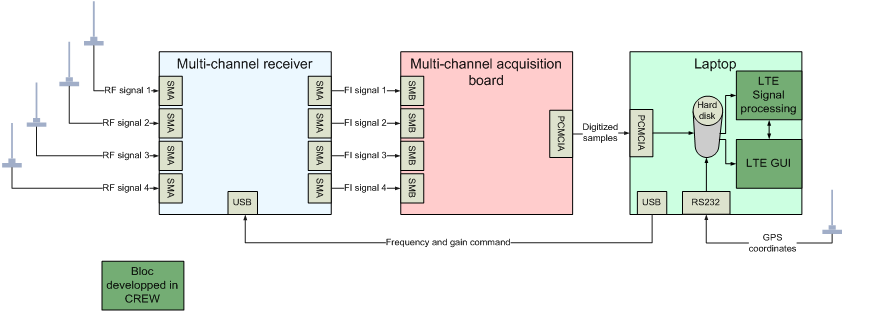

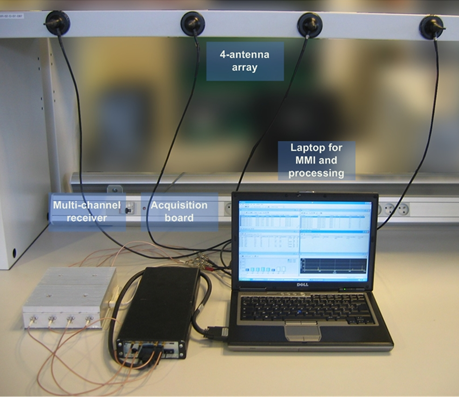

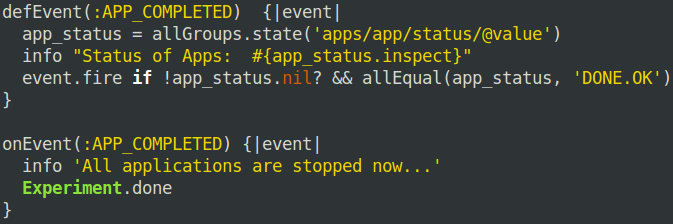

The multi-antenna LTE sensing platform acquires LTE (I, Q) data and processes them using advanced antenna processing algorithms. As shown in the pictures below, the multi-antenna demonstrator is made of:

- A set of 5 antennas,

- A 4-channel receiver,

- A 4-channel acquisition board,

- A GPS system for positioning,

- A laptop for data storage and off-line multi-antenna signal processing.

Multi-antenna sensing platform block diagram

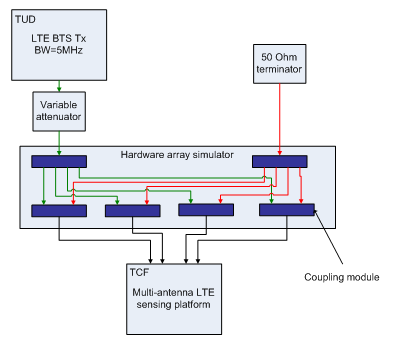

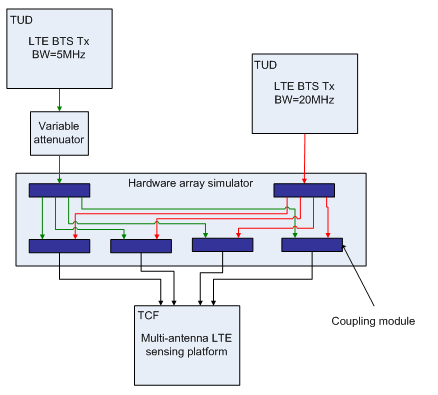

Filtering and gain control are applied to the signal in the multi-channel receiver unit, the multi-channel acquisition board converts the signals to the digital domain, and a control computer handles processing and evaluation of the digital samples. The multi-antenna LTE sensing platform is validated with lab tests by measuring sensitivity and co-channel interference rejection with real LTE eNBs. A hardware array simulator consisting of splitters, coupling modules and a set of cables of particular lengths is employed to virtually create a multi-antenna, mono-path propagation channel with two directions of arrival.

Multi-antenna sensing platform

The main characteristics of the multi-channel receiver are:

- Frequency bands: 1920-1980 MHz / 2110-2170 MHz

- Bandwidth: 5 MHz

- Output intermediate frequency: 19.2 MHz

- Noise factor (at maximal gain): < 7 dB

- Rx gain: 0 to 30 dB (1 dB step)

- Frequency step: 200 kHz

- Number of Rx channels: 4

- Gain dispersion: < 1 dB

- Phase dispersion: < 6°

- Frequency stability: < 10^-7

- Selectivity at ±5 MHz: > 50 dB

The main characteristics of the multi-channel acquisition board are:

- Resolution: 12 bit

- Internal quartz clock: 15.36 MHz

- Number of channels: 4

- -3 dB bandwidth: > 25 MHz

- Memory: 8 MSamples (i.e. 2 MSamples per channel)
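The memory and clock figures give a rough idea of how long a single capture can be. The Python sketch below is a back-of-the-envelope estimate under two assumptions that the text does not confirm: that each channel is sampled at the 15.36 MHz quartz clock rate, and that "MSamples" means 10^6 samples.

```python
CLOCK_HZ = 15.36e6                 # internal quartz clock, assumed to equal the sample rate
SAMPLES_PER_CHANNEL = 2_000_000    # 2 MSamples per channel, assuming 1 M = 10**6


def capture_duration_s(samples=SAMPLES_PER_CHANNEL, rate_hz=CLOCK_HZ):
    """Duration in seconds of one capture that fills the per-channel memory."""
    return samples / rate_hz


if __name__ == "__main__":
    duration = capture_duration_s()
    # Under these assumptions one capture lasts roughly 130 ms.
    print(f"{duration * 1e3:.1f} ms per capture")
```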

Sensitivity tests

For sensitivity measurements, the platform depicted below is used. The level of the BTS is gradually lowered in order to estimate the sensitivity level when using one or four channels for detection processing.

Lab test platform for sensitivity measurements

Interference rejection tests

For interference rejection measurements, the platform described in the picture below is used. The second BTS is considered the interfering BTS in the following. Its bandwidth was set to 20 MHz in order to be able to heavily load the transmitted OFDM symbols. The level of the first BTS is gradually lowered while the level of the second one does not change. The 80% detection limit level is determined when using one or four channels for detection processing.

Lab test platform for interference rejection measurements

Results

LTE detection sensitivity level for an 80% detection rate

| | 1 antenna | 4 antennas | Multi-antenna gain |
|---|---|---|---|
| Sensitivity level | -121 dBm | -129 dBm | 8 dB |

The LTE detection sensitivity performance is summarized in the table above. The measured multi-antenna gain is slightly higher than what can be expected (6 dB with 4 antennas). This might be due to a lower sensitivity of the first channel compared to the other three.
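The expected 6 dB corresponds to the ideal array gain of 10·log10(N) for N = 4 antennas, which holds for coherent combining with uncorrelated noise across channels (an assumption not spelled out in the text). The following Python sketch, with hypothetical function names, compares that ideal value with the measured gain from the table.

```python
import math


def ideal_array_gain_db(num_antennas):
    """Ideal SNR gain of coherent combining over num_antennas channels, in dB."""
    return 10 * math.log10(num_antennas)


def measured_gain_db(sensitivity_single_dbm, sensitivity_array_dbm):
    """Gain derived from two sensitivity levels (a lower level is better)."""
    return sensitivity_single_dbm - sensitivity_array_dbm


if __name__ == "__main__":
    print(f"ideal gain, 4 antennas: {ideal_array_gain_db(4):.2f} dB")
    print(f"measured gain: {measured_gain_db(-121, -129)} dB")
```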

LTE rejection capacity for an 80% detection rate

| | 1 antenna | 4 antennas | Multi-antenna gain |
|---|---|---|---|
| Sensitivity level of the first BTS | -92 dBm | -124 dBm | 32 dB |
| Rejection capacity of the second BTS | 11 dB | 43 dB | 32 dB |

We can see that, with four antennas, the rejection gain is equal to 32 dB.

For further details refer to: Nicola Michailow, David Depierre and Gerhard Fettweis: “Multi-Antenna Detection for Dynamic Spectrum Access: A Proof of Concept”, QoSMOS Workshop at IEICE 2012

https://mns.ifn.et.tu-dresden.de/Lists/nPublications/Attachments/895/main.pdf

| Attachment | Size |

|---|---|

| ltep12.png | 33.27 KB |

| ltep13.png | 253.64 KB |

| ltep14.png | 14.14 KB |

| ltep15.png | 16.03 KB |

Basic tutorial

This tutorial explains how to set up a basic transmission.

Download this Zip archive with all necessary default configuration files.

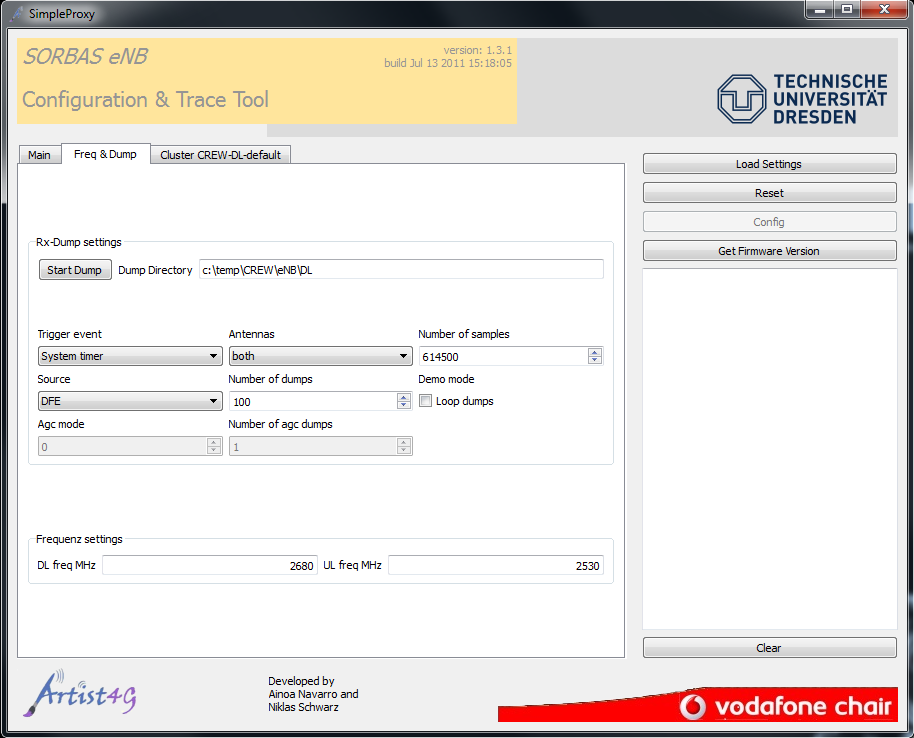

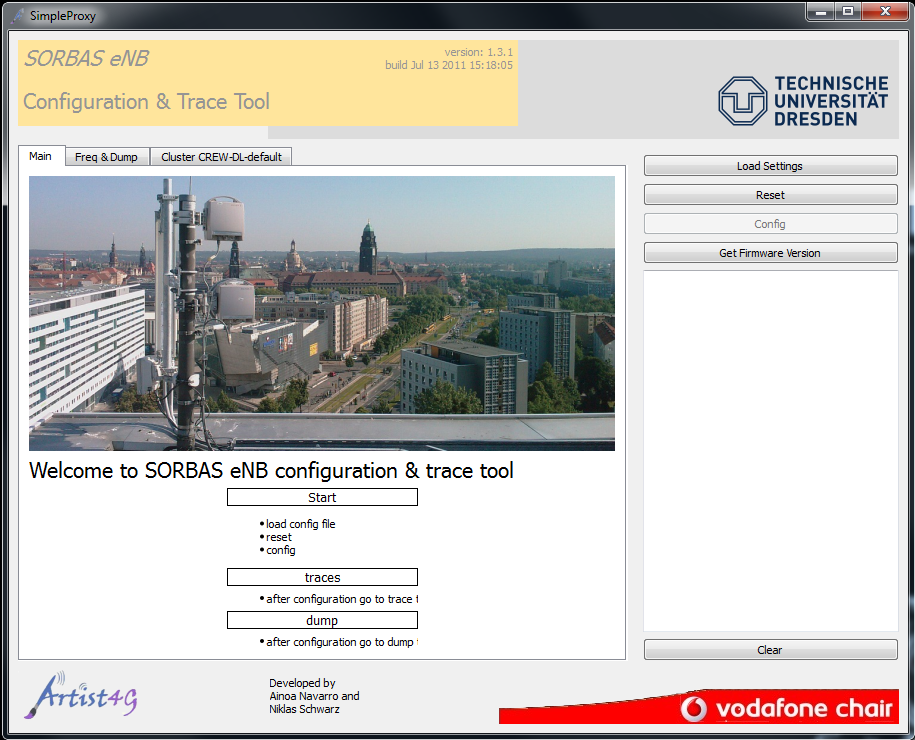

Setup the eNB

- Power the hardware

- Sorbas eNB Simulator

- Radio Unit

- eNB control computer

- Configure the eNB

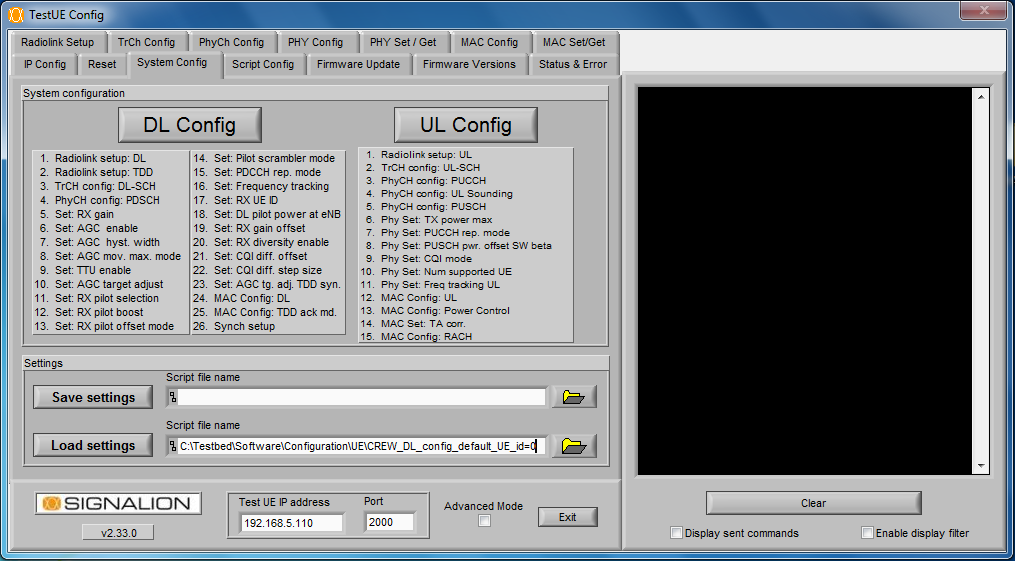

- Open the SimpleProxy 1.3.1 tool

- Click Load Settings and select CREW_DL_config_default_1eNB_2UEs.xml or CREW_UL_config_default_1eNB_2UEs.xml

- Click Reset to reset the eNB

- Wait for eNB broadcast message to appear in the logging box below

- Click Config to send the configuration to the eNB

- Check logging box for errors


Setup the UE

- Power the hardware

- Sorbas Test UE

- Radio Unit

- UE control computer

- Configure the UE

- Trace

The system is now running. Check spectrum on R&S FSQ.

Record IQ data dumps

Advanced tutorial

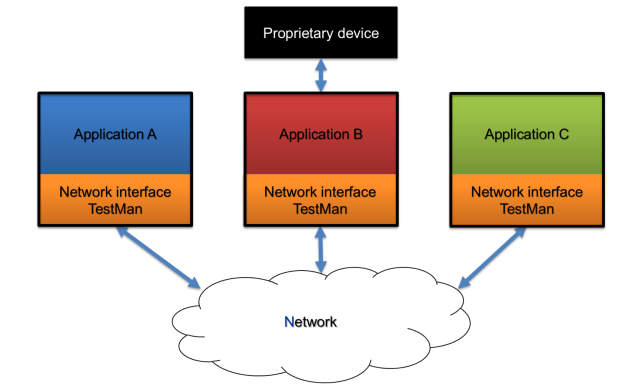

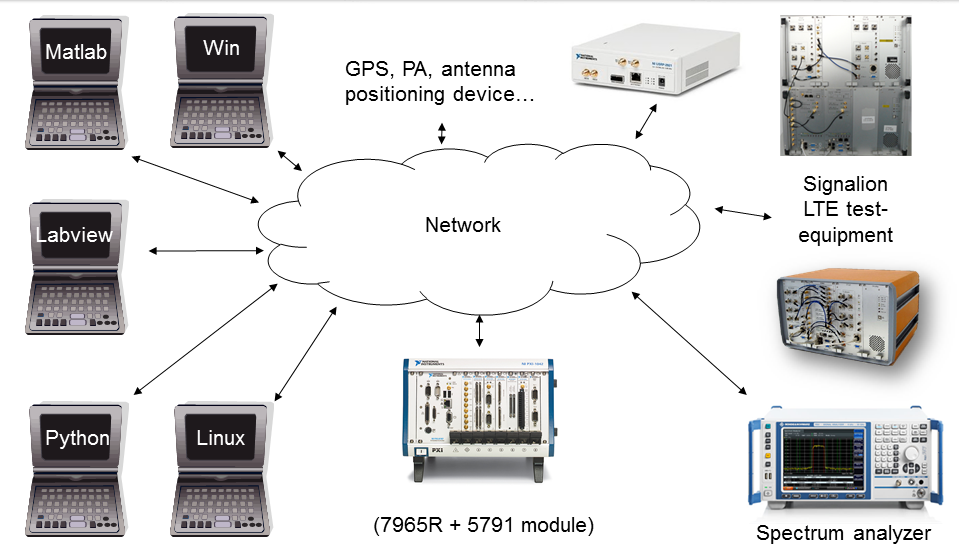

Besides the manual, GUI-based control of testbed-related programs and devices, script-controlled measurements are also possible. This approach, developed at the Vodafone chair, is called TestMan. The basic idea is to provide a common interface to exchange data, commands and status messages between different applications, running on the same or distributed systems and written in different languages.

TestMan is based on Microsoft's .NET framework, which, similar to Java, is platform independent. So even a Linux computer can make use of .NET programs if the Mono project is used. Three important languages, Matlab, Labview and Python, can load dynamic link libraries (DLLs). TestMan is therefore a DLL that can be loaded into the specific application or script, and it makes sure that data is transferred over the network from one application to another, or to a group of applications.

TestMan uses two different network techniques to exchange information: UDP multicast is used for SNMP-like status messages and commands, whereas a TCP peer-to-peer connection is used for transferring larger amounts of data.

To distinguish between different applications a type and an ID are introduced for every application. So it is possible to group similar applications together and the TestMan DLL filters out messages which are intended for other applications.
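The filtering idea can be illustrated with a few lines of Python. This is not the TestMan API, which is not documented here: the message fields, the class and function names, and the convention that an empty ID addresses a whole group are all assumptions made for this sketch.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Message:
    """Hypothetical multicast message; the field names are assumptions."""
    target_type: str            # application type, e.g. "transmitter"
    target_id: Optional[int]    # specific application ID, or None for the whole group
    payload: str


def accepts(msg, my_type, my_id):
    """Return True if an application of (my_type, my_id) should process msg."""
    if msg.target_type != my_type:
        return False            # message is intended for another kind of application
    return msg.target_id is None or msg.target_id == my_id


if __name__ == "__main__":
    group_cmd = Message("transmitter", None, "start")
    single_cmd = Message("transmitter", 2, "stop")
    print(accepts(group_cmd, "transmitter", 1))   # group message reaches every transmitter
    print(accepts(single_cmd, "transmitter", 1))  # addressed to transmitter 2 only
```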

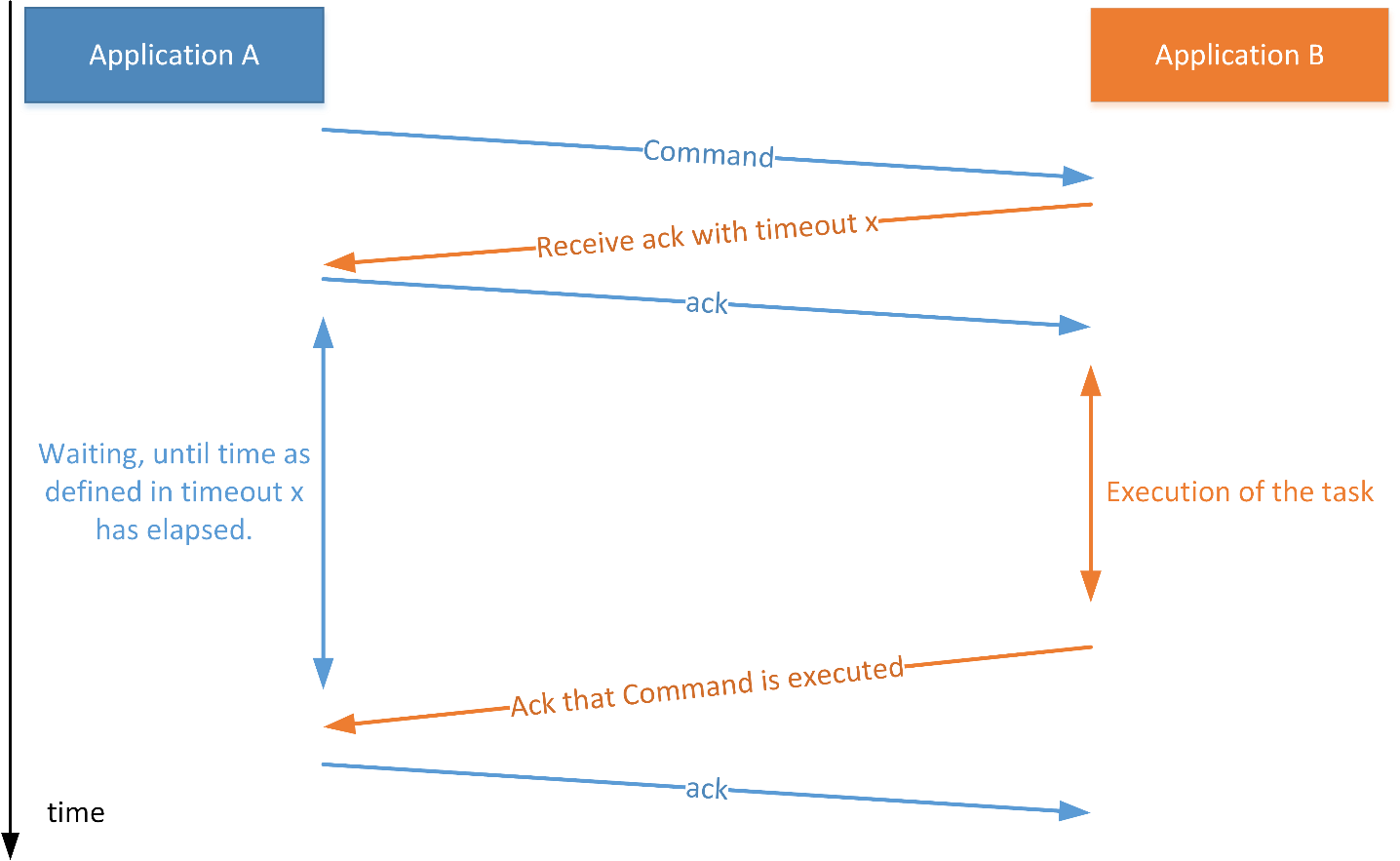

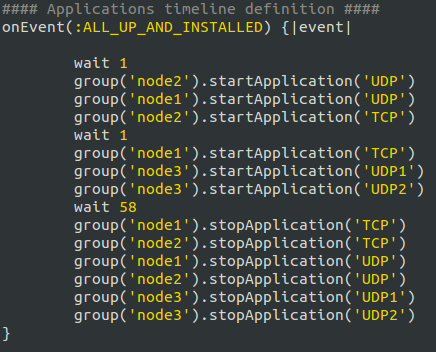

UDP packets can be dropped by network elements like routers and switches. For status messages this is not always a problem, but it definitely is when a command is sent. To mitigate this problem TestMan uses 4-way handshaking for commands, as shown in the following figure.

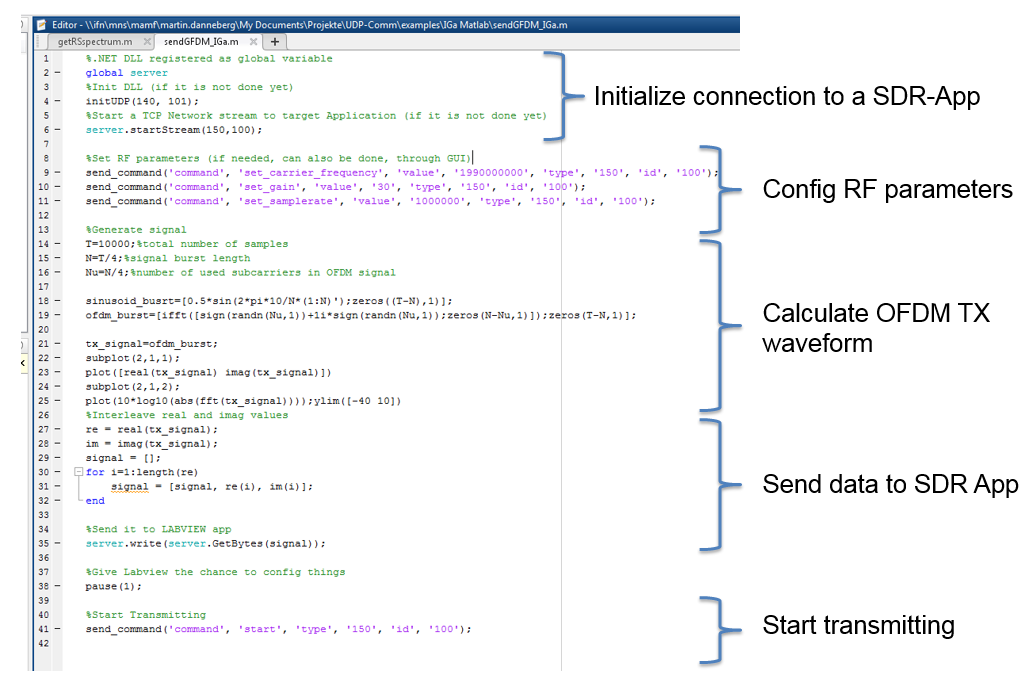

The following picture shows an example of how an OFDM transmitter can be implemented using TestMan.

More detailed example code will be published soon.

| Attachment | Size |

|---|---|

| TestMan_Commands.png | 83.12 KB |

| matlab-example.png | 180.36 KB |

| TestMan_Devices.png | 243.01 KB |

| TestMan_Overview.png | 41.9 KB |

Hardware

In this section you can find detailed description of the hardware used in the testbed.

Configuration

Dresden’s LTE/LTE+ like testbed was set up in 2008 as part of the Easy-C project (www.easy-c.com).

The signal processing hardware includes:

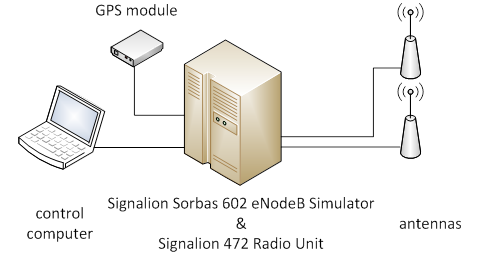

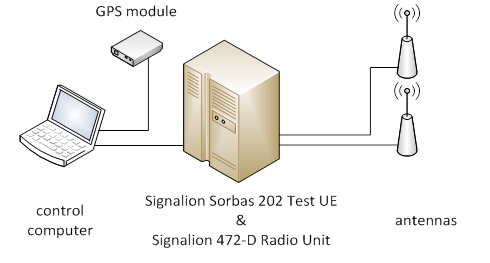

- Sorbas602 eNodeB Simulators with ZF Interface to a Sorbas Radio Unit ("ZF Interconnect")

- Sorbas202 Test UEs with ZF Interface to a Sorbas Radio Unit ("ZF Interconnect")

- Sorbas472 Radio Units, by Signalion: EUTRAN band VII (2.5 - 2.57 GHz and 2.62 - 2.69 GHz) as well as close to band I (1.98-2 GHz and 2.17-2.19 GHz), 20MHz bandwidth, Tx power approx. 15dBm (indoor) and approx. 30dBm (outdoor), supports up to two Tx and two Rx channels.

They were supplied by Signalion (www.signalion.com). The eNBs and UEs are connected through IF interconnects at 70MHz with the radio unit frontend. The hardware supports up to two Tx and two Rx channels for MIMO capability. The testbed operates in EUTRAN band VII (DL @ 2670-2690 MHz / UL @ 2550-2570 MHz) with fixed bandwidth of 20MHz and in FDD mode.

The LTE testbed at TUD has been upgraded with a new spectrum license in the 2.1 GHz band (1980 MHz to 2000 MHz and 2170 MHz to 2190 MHz). This step was necessary to ensure the continuous operation of the LTE testbed when the spectrum license for 2.6 GHz is withdrawn due to commercial use of the corresponding frequencies in Germany. Along with the license, several nodes have been equipped with 2.1 GHz frontends. Note that only the RF parts have been replaced, while LTE eNB and UE baseband processing remains unaffected due to the modular structure of the equipment. Further note that as long as the 2.6 GHz license is not withdrawn, those frequencies are still available for experimentation.

The operation of the new equipment has been successfully tested. The internal US5 experiment “LTE Multi-Antenna Sensing” has been conducted in the 2.1 GHz frequency range.

Base station (eNB) and mobile terminal (UE) nodes each are connected to a host PC and configured with text files in XML format. The host computer also manages measurements of the received signals and stores them in dumps. At the eNBs, a GPS unit is used for synchronization, while the UEs employ GPS for position tracking. Additionally, UEs can be powered by a mobile power supply if necessary.

Configuration of a base station node (eNB)

Configuration of a mobile terminal node (UE)

All UEs in the testbed, as well as the indoor eNBs are equipped with Kathrein 800 10431 omnidirectional antennas. The antennas of the outdoor eNBs are sectorized and of type Kathrein 800 10551. You can find detailed information about these antennas here:

http://www.kathrein-scala.com/catalog/80010431.pdf

http://www.kathrein.de/en/mcs/catalogues/download/99811214.pdf

Other testbed equipment includes six batteries that can each power an individual UE for around 2-4 hours, GPS receivers for time synchronization, and various cables, attenuators and splitters. Measurement equipment includes the spectrum analyzers Rohde & Schwarz FSH4, Rohde & Schwarz FSQ8 and Rohde & Schwarz TSMW. For more details see the following links:

R&S FSH4 data sheet:

http://www.test-italy.com/occasioni/2013/rs_fsh8-18/FSH_dat-sw_en.pdf

R&S FSQ8 data sheet:

R&S TSMW operating manual:

http://www.rohde-schwarz.de/file_9446/TSMW_Operating_Manual.pdf

R&S TSMW software manual:

http://www.rohde-schwarz.de/file_11255/TSMW_Interface_and_Programming_Manual.pdf

| Attachment | Size |

|---|---|

| ltep05.png | 29.64 KB |

| ltep06.png | 28.13 KB |

Indoor and outdoor setups

For the CREW project, two experimentation setups are available.

The indoor lab features 5 eNBs and 4 UEs. While the hardware itself is stationary, the Tx and Rx antennas can be positioned anywhere in the lab room. Further, four additional UEs are mounted on studio racks/carts and can be moved within the building. The approximate transmit power is 15 dBm.

The outdoor lab consists of two base station sectors that are fixed on two opposing corners of the faculty building, approximately 150 m apart. In addition to the mobile indoor UEs from setup 1, three rickshaw UEs are available for outdoor experiments in the vicinity of the building. There are also 6 batteries, each of which can supply a UE for around 2-4 hours. The transmit power is approximately 30 dBm.

Outdoor setup

Indoor setup

| Attachment | Size |

|---|---|

| ltep07.png | 918.06 KB |

| ltep08.png | 494.97 KB |

Secondary System

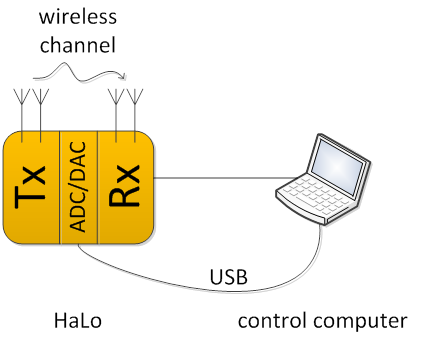

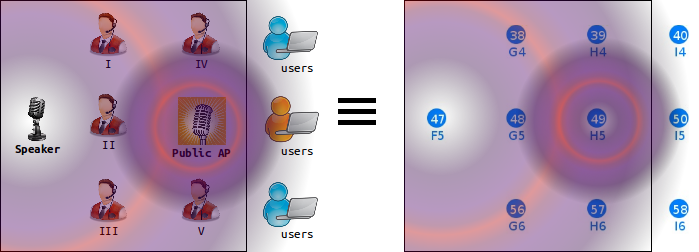

The Signalion Hardware-in-the-Loop (HaLo) is a platform designed to simplify the transition from simulation to implementation. To support cognitive radio setups that consider a primary/secondary user configuration, the LTE testbed has been extended by a HaLo node that can take the role of the secondary user. On the HaLo device, a novel modulation scheme called Generalized Frequency Division Multiplexing (GFDM) is now available.

This enables experimenters to consider experiment setups in the LTE testbed where the LTE system acts as a monitored primary system, while the GFDM system runs as an interfering secondary system.

HaLo Concept

The HaLo consists of a wireless transceiver that can operate in the 2.6 GHz frequency band. Complex-valued data samples are transferred from a control computer to the device's memory via USB. The samples can either be read from a previously recorded file or generated on the fly, e.g. by a Matlab script. The signal is transmitted over the air and received in a similar way: the device digitizes the signal and stores complex-valued samples in an internal memory before they are fed back via USB to the control computer.
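To make the idea of generating samples "on the fly" concrete, the Python sketch below creates a complex baseband tone and serializes it as interleaved I/Q values. It is not the HaLo interface: the binary layout (little-endian 32-bit floats, I followed by Q) and all function names are assumptions chosen for illustration, and the actual format expected by the device has to be obtained from the testbed staff.

```python
import cmath
import struct


def generate_tone(num_samples, cycles_per_sample, amplitude=1.0):
    """Complex exponential with the given normalized frequency (cycles per sample)."""
    return [amplitude * cmath.exp(2j * cmath.pi * cycles_per_sample * n)
            for n in range(num_samples)]


def pack_iq(samples):
    """Serialize complex samples as interleaved little-endian float32 I/Q (assumed layout)."""
    flat = []
    for s in samples:
        flat.extend((s.real, s.imag))
    return struct.pack(f"<{len(flat)}f", *flat)


def unpack_iq(data):
    """Inverse of pack_iq: bytes back to a list of complex samples."""
    if len(data) % 8 != 0:
        raise ValueError("expected a whole number of 8-byte I/Q pairs")
    count = len(data) // 4
    flat = struct.unpack(f"<{count}f", data)
    return [complex(flat[i], flat[i + 1]) for i in range(0, count, 2)]


if __name__ == "__main__":
    tone = generate_tone(8, 0.125)   # one full cycle over 8 samples
    blob = pack_iq(tone)
    print(len(blob), "bytes for", len(unpack_iq(blob)), "samples")
```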

The HaLo setup

Note that due to limitations in the HaLo’s internal memory real-time operation is not possible.

GFDM Theory

The transmission scheme chosen to be implemented on the HaLo device to act as a secondary system in the testbed is a novel, non-orthogonal and flexible modulation scheme called GFDM. A multicarrier signal is transmitted, quite similar to the well-known and established OFDM scheme; however, one of the differences is the pulse shaping of the individual subcarriers. This step allows shaping of transmissions and produces a signal with particularly low out-of-band radiation, which is a very desirable property in cognitive radio. For further details please refer to:

https://mns.ifn.et.tu-dresden.de/Lists/nPublications/Attachments/826/Michailov_N_VTCfall12.pdf

GFDM transmitter and receiver block diagram

| Attachment | Size |

|---|---|

| ltep09.png | 26.04 KB |

| ltep10.png | 51.37 KB |

Tools

SimpleProxy 1.3.1

This tool is installed on all eNB control computers and is used to connect to an eNB, load a configuration and dump IQ data at eNB.

TestUE Config

This tool is installed on all UE control computers and is used to configure a UE.

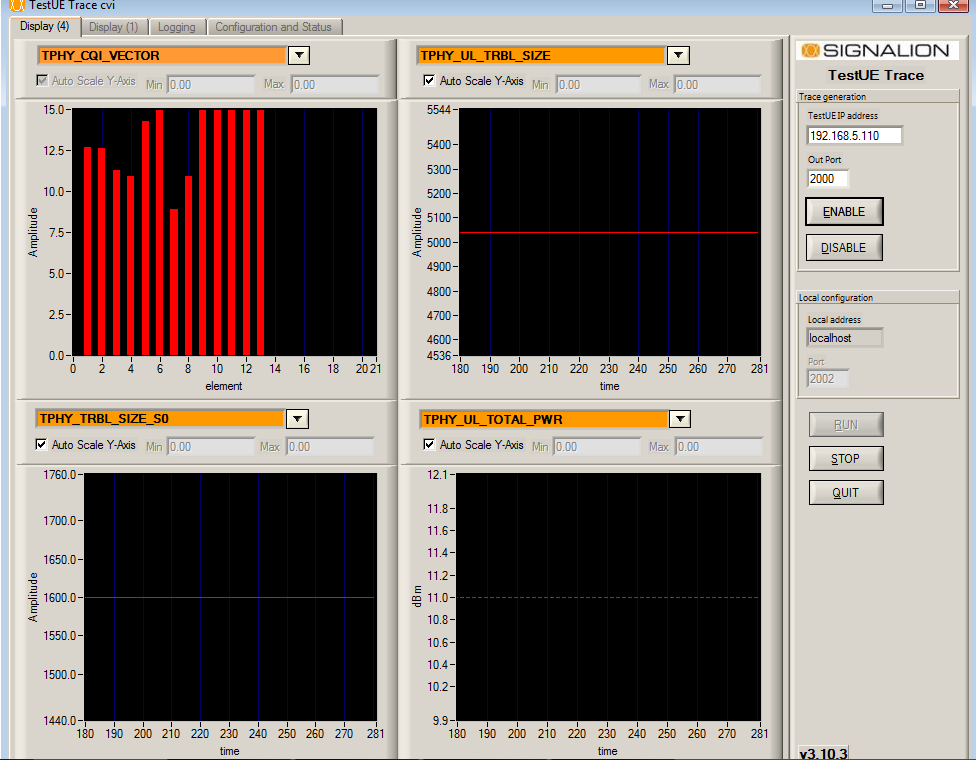

Test UE Trace

This tool is installed on all UE control computers and is used to monitor UE activity in real-time.

UE_dump_tool

This tool is installed on all UE control computers and is used to record the UE's IQ data dumps.

GFDM chain

This tool is used to generate a secondary user signal and control the HaLo node.

FAQ

TWIST documentation

Browse the sections below for information about the TWIST testbed.

!!! THIS INFORMATION MIGHT BE OUTDATED !!!

Updated documentation can be found at https://www.twist.tu-berlin.de/

Introduction and overview of capabilities

TKN Wireless Indoor Sensor network Testbed (TWIST)

The TKN Wireless Indoor Sensor network Testbed (TWIST), developed by the Telecommunication Networks Group (TKN) at the Technische Universität Berlin, is a scalable and flexible testbed architecture for experimenting with wireless sensor network applications in an indoor setting. The TWIST instance deployed at the TKN group includes 204 sensor nodes and spans three floors of the FT building on the TU Berlin campus, resulting in more than 1500 square meters of instrumented office space. TWIST can be used locally or remotely via a web interface.

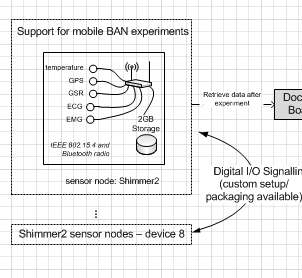

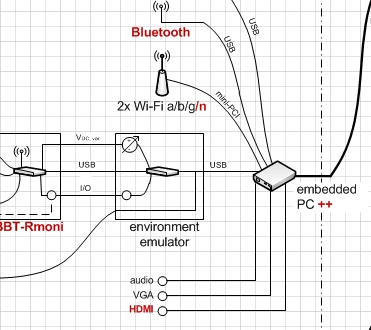

Additional components

In addition to TWIST, which is a fixed testbed infrastructure, CREW experiments involving mobility can be carried out in the TKN premises using additional equipment. The use of this equipment requires additional support at the TKN premises. This can be achieved either by experimenters being present at the premises or by additional support from TWIST staff.

The additional components are:

- 2 mobile robots: Turtlebot-II based on Kobuki mobile base and a Microsoft Kinect 3D sensor. The robot runs ROS (an open-source, meta-operating system) and it can be programmed to follow certain trajectories in the TWIST building. Shimmer2 sensor nodes or WiSpy devices (see below) can be mounted on the robot, e.g. to record RF environmental maps, or perform experiments emulating body area networks (BANs) as well as experiments involving interaction between a mobile network and the fixed TWIST infrastructure.

- 8 Shimmer2 nodes, which are wearable sensor nodes similar to the popular TelosB platform and can be attached to a person (or robot).

- 10 WiSpy 2.4x USB Spectrum Analyzers, which are low-cost devices to scan RF noise in the 2.4 GHz ISM band.

- 3 ALIX2D2 embedded PCs equipped with Broadcom WL5011S 802.11b/g cards.

Getting started: tutorials

Below you find information on how to get started using the TWIST testbed. Most steps involve remote access via the TWIST web interface, but there is also a more advanced tutorial on how to control TWIST via the cURL command line tool.

Requesting a user account

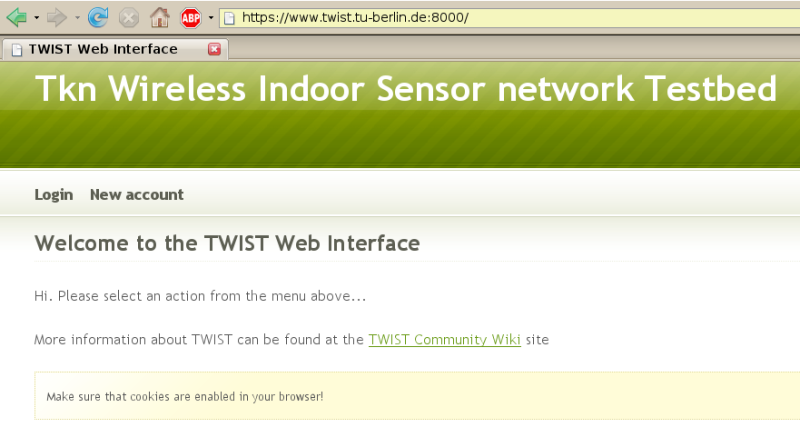

To access the TKN instance of the TWIST web interface you need to have registered an account. If you are not yet registered, go to the TWIST web interface where you should see the following welcome page:

Make sure that your browser has cookies enabled and click on "New account". In the form fill in your name, email address and choose a username (at least 6 characters) and a password. Make sure you confirm the password and answer the spam control question. Then press the "Request" button; if you filled in the form correctly you will see a new page saying "Successful account request". Now go to the TWIST terms of use page. Copy and paste the content of this page into an email, add the requested information (the nature of the intended experiments, etc.) and send this email to the TWIST administrator (the email address is given on the same webpage). Please also make sure that you explain your relationship to the CREW project. The last step in obtaining an account is the responsibility of the administrator, and you will be notified by email when your account has been activated. If there are any problems, please contact Mikolaj Chwalisz.


| Attachment | Size |

|---|---|

| TWIST_login_screen.png | 96.54 KB |

Running a simple experiment

Installing a node image

In this section we install the TinyOS 2 Oscilloscope application on a set of Tmote Sky nodes in the TKN TWIST testbed. The Oscilloscope application is described in the TinyOS 2 tutorial 5. After you have compiled the application with make telosb, open a web browser and access the TWIST web interface. Press the "Login" button, enter your username and password and then click on "Sign in". If you have not yet registered a TWIST user account, see the section "Requesting a user account" above.

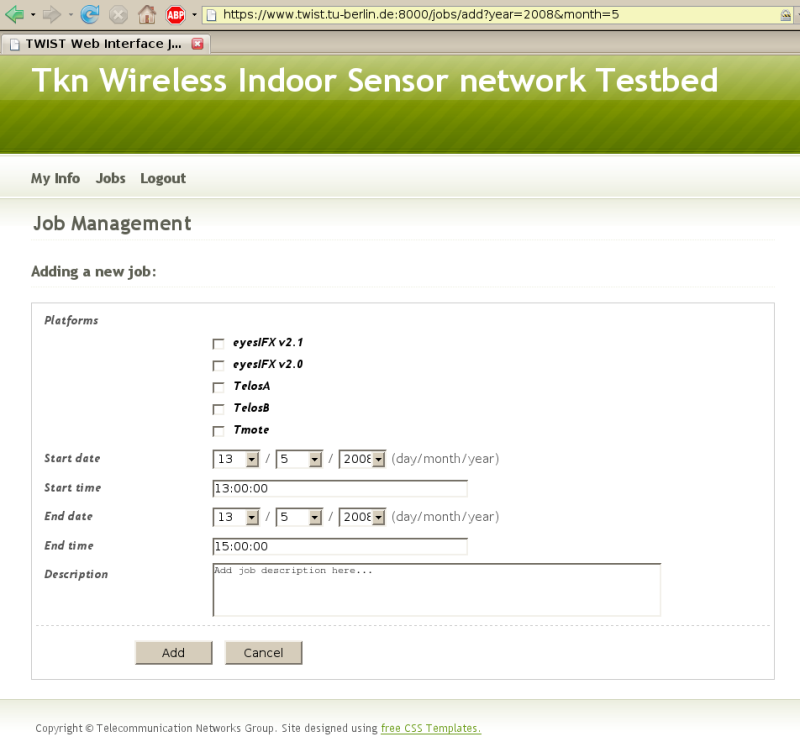

You will see a welcome page where you have three options: manage and update your account settings ("My Info"), schedule and control jobs in the testbed ("Jobs") or logout ("Logout"). Click on "Jobs" and you will see a list of scheduled jobs, i.e. the currently active jobs as well as pending future jobs. Take a close look at the list and find a time period for which Tmote/TelosB nodes are not reserved by someone else. Then click on "Add" and you will see the Job Management page as follows:

Under "Platforms" select Tmote; then choose a "Start/End date" and "Start/End time" such that the time interval is not overlapping with other jobs, which you checked in the previous step. You cannot make a real mistake here, because the system will automatically check for and not permit jobs that are overlapping in time if they use the same mote platform. However, different platforms (eyesIFX vs. Tmote) may be used concurrently. In the field "Description" enter a short note on what you plan to do in your job, such as "Testing the T2 Oscilloscope application", then click on "Add". If the time interval that you entered was accepted you will be taken back to the list of scheduled jobs, otherwise you get an error message and need to adapt the values.
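The scheduling rule described above (no two jobs on the same mote platform may overlap in time, while different platforms can run concurrently) can be sketched as follows. This is an illustration of the rule, not the TWIST server's implementation: the dictionary keys are hypothetical, and treating a job that ends exactly when another starts as non-overlapping is an assumption.

```python
from datetime import datetime


def jobs_conflict(job_a, job_b):
    """Return True if two jobs use the same platform and their time intervals overlap.

    Each job is a dict with the hypothetical keys "platform", "start" and "end".
    Intervals are treated as half-open: [start, end).
    """
    same_platform = job_a["platform"] == job_b["platform"]
    overlap = job_a["start"] < job_b["end"] and job_b["start"] < job_a["end"]
    return same_platform and overlap


if __name__ == "__main__":
    existing = {"platform": "Tmote",
                "start": datetime(2024, 1, 1, 10, 0), "end": datetime(2024, 1, 1, 12, 0)}
    mine = {"platform": "Tmote",
            "start": datetime(2024, 1, 1, 11, 0), "end": datetime(2024, 1, 1, 13, 0)}
    other_platform = dict(mine, platform="eyesIFX")
    print(jobs_conflict(existing, mine))            # same platform, overlapping times
    print(jobs_conflict(existing, other_platform))  # different platform, allowed
```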

The list of "Scheduled jobs" should now include your job. Your entry is likely to have gray background colour, meaning that it is registered but not yet active. The current system time is always shown in the upper right corner of the page and once your job becomes active -- its start time is shown in the column "Start" -- the background colour of your entry will turn yellow (you need to click the reload button of your browser).

When your job is active apply a tick mark at the left side of the entry and press the "Control" button at the bottom (the "Edit" button would be used to change the time of your job and with the "Delete" button you can remove your job).

Hint: When your job is active (during an experiment) you can still extend its "End time" by clicking on "Edit" on the "Jobs" page, provided that the new "End time" does not overlap with other registered jobs.

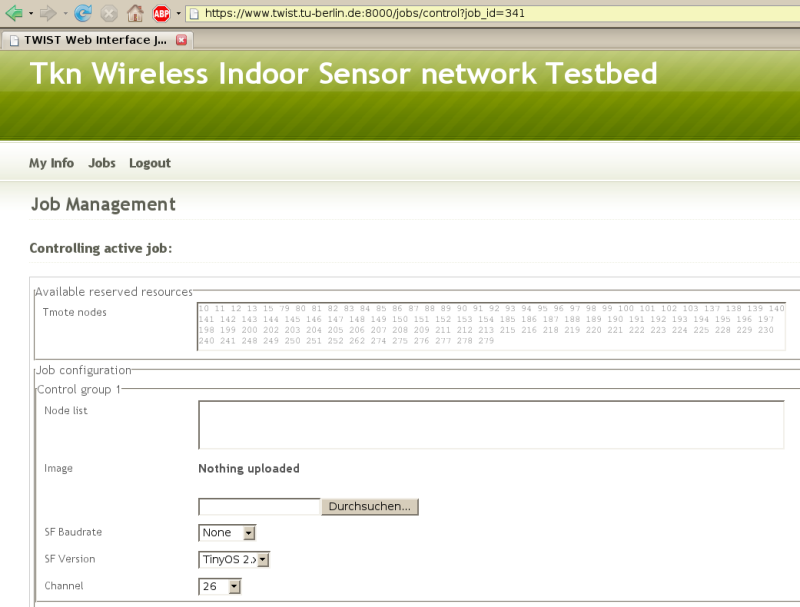

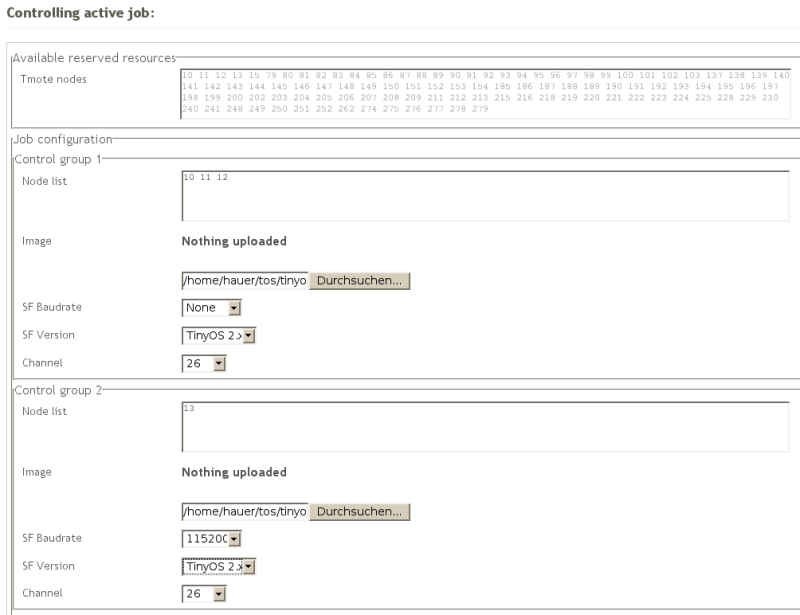

After you have clicked the "Control" button you will see the page for controlling your active job as shown in this figure:

This page is divided into the list of Tmote node IDs available in the testbed ("Available reserved resources"), a section for submitting up to three different program images to be programmed on a subset of the nodes ("Job configuration") and a set of buttons (on the bottom, not shown in Figure 3) to perform some actions, such as installing the image(s) on the nodes.

For the TinyOS 2 Oscilloscope application we want to install the Oscilloscope program image on some Tmote nodes, and one node will need to act as gateway and will be programmed with the TinyOS 2 BaseStation application (see TinyOS 2 tutorial 5). Because we will install two different application images, in the "Job configuration" field we will use two of the three "Control group" sections: the "Control group 1" section for the Oscilloscope application and the "Control group 2" section for the BaseStation application.

In the "Control group 1" section, enter in the "Node list" field a whitespace-separated list of the node IDs on which the Oscilloscope application is to be programmed, let's say 10 11 12. For convenience you can copy & paste from the list of IDs shown on top in the "Available reserved resources" list.

Then click on the "browse..." button next to the "Image" field just below the "Node list" field. Select the Oscilloscope image, which is the main.exe in your local tinyos-2.x/apps/Oscilloscope/build/telosb (you must have compiled the Oscilloscope application with "make telosb" before). The "SF Baudrate" and "SF Version" fields control whether a SerialForwarder will be started for all nodes in the respective "Node list". Since we only need a SerialForwarder for the BaseStation application, we don't change the values (leaving them at "None", "TinyOS 2.x"). Finally, "Channel" is the IEEE 802.15.4 channel to be used by the Tmote Sky radio CC2420 (if you change the channel for the Oscilloscope application, make sure that you do the same for the BaseStation application). In fact, the value of the CC2420_DEF_CHANNEL symbol inside your program image will be replaced by the value of the "Channel" field and thus, if your application includes the TinyOS 2 CC2420 radio stack, you can still modify the default radio channel after you have compiled the image.
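When choosing a value for the "Channel" field it can help to know which centre frequency a channel number corresponds to. In the 2.4 GHz band, IEEE 802.15.4 defines channels 11 to 26, spaced 5 MHz apart starting at 2405 MHz. The small Python helper below (the function name is made up for this example) performs the conversion.

```python
def channel_to_mhz(channel):
    """Centre frequency in MHz of an IEEE 802.15.4 channel in the 2.4 GHz band."""
    if not 11 <= channel <= 26:
        raise ValueError("2.4 GHz IEEE 802.15.4 channels range from 11 to 26")
    return 2405 + 5 * (channel - 11)


if __name__ == "__main__":
    for ch in (11, 15, 26):
        print(ch, channel_to_mhz(ch), "MHz")
```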

Hint: The node ID is another symbol that is modified for each node individually before programming the image. It is accessible via TOS_NODE_ID in a TinyOS application.

We use the "Control group 2" section for installing the BaseStation program image on another node. In the "Node list" field enter 13 (or whichever node ID you want to use for the BaseStation application) and under "Image" click "browse..." and select the main.exe from your local tinyos-2.x/apps/BaseStation/build/telosb folder (you must have compiled the BaseStation application with "make telosb" before). Because we want to later establish a serial connection to the BaseStation node, select the pull-down menu under the "SF Baudrate" field and choose a serial baudrate. Whenever this field has a value other than None a SerialForwarder will be started for all nodes in the respective "Node list". The default baud rate for the "TelosA", "TelosB" and "Tmote" platforms is 115200 baud.

Hint: You can change the baud rate for a telos node by modifying tinyos-2.x/tos/platforms/telosa/TelosSerialP.nc (this file is included by telosa, telosb and tmote platform). Make sure you recompile your application after changing the file.

The "SF Version" field defines the version of the SerialForwarder protocol. Because we are using a TinyOS 2 application, select "2" (for a TinyOS 1 application you would select "1"). If the "SF Baudrate" field is None then the "SF Version" is ignored. Finally, make sure you select the same "Channel" as the one for the Oscilloscope application. Your configuration should now look like the one shown in the next figure:

To actually program the images on the nodes scroll down, press the "Install" button and wait. After not much longer than 1 minute you should see a page with the "Execution log". Check for possible errors (any line "Could not find symbol [...] ignoring symbol" is only telling you that the respective symbol was not found/changed in the application image) and scroll down to the bottom where you can find a summary of the "Install" operation. Here you can also see that a SerialForwarder has been started for node 13:

To forward SF e.g. for node 13 use: ssh -nNxTL 9013:localhost:9013 twistextern@www.twist.tu-berlin.de

In the next section we will establish an ssh tunnel to the TWIST server and connect to the SerialForwarder of the BaseStation node. The remainder of this section summarizes the fields and options for controlling an active job over the web interface.

The following table describes the fields in the "Job configuration" section:

| Field | Meaning |
|---|---|
| Node list | Whitespace-separated list of node IDs on which the image will be programmed |
| Image | The image to be programmed on the nodes in the "Node list" |
| SF Baudrate | Whether a SerialForwarder is started for each of the nodes in "Node list" and what baudrate it will use |
| SF Version | The version of the SerialForwarder: use 1 for TinyOS 1.x and 2 for TinyOS 2.x |
| Channel | The CC2420 radio channel |

The following table describes the buttons on the bottom of the "Controlling active job" page:

| Button | Meaning |
|---|---|
| Install | Installs the image(s) on the node(s) specified in the above "Job configuration" section; SerialForwarders will be started (if selected) and nodes are powered on |
| Erase | Programs the TinyOS Null application on the selected set of nodes |
| Reset | Resets (powers off & on) the selected set of nodes |
| Power On | Enables the USB power supply for the selected nodes |
| Power Off | Cuts the USB power supply for the selected nodes |
| Start SF | Starts a SerialForwarder for the selected nodes |
| Stop SF | Stops the SerialForwarder for the selected nodes |
| Start Tracing | Stores the serial data output from the nodes in a trace file |
| Stop Tracing | Stops storing data in a trace file |

By pressing the "Start Tracing" button the serial data output from all nodes is automatically stored in a trace file. This file can be accessed via the job control page by pressing the "Traces" button (with your job checked). If you want to use automatic tracing then it is recommended that during install you select the correct "SF Baudrate" and "SF Version". After the install process, you can then simply click on "Start Tracing" without having to manually start the SerialForwarders.

Exchanging Data via the Serial Connection

Through the previously described "Install" operation a SerialForwarder for the BaseStation node was started. In order for your tinyos-2.x/apps/Oscilloscope/java/Oscilloscope.java client to connect to this SerialForwarder, you first need to establish an SSH Tunnel to forward the port of the SerialForwarder to your machine. At the very end of the execution log you find the syntax for this SSH command (type it into a shell):

ssh -nNxTL 9013:localhost:9013 twistextern@www.twist.tu-berlin.de

Once you have forwarded the port you can access the remote SerialForwarder like a local one. However, when you start your client application make sure that it attaches to the correct port as specified in the SSH Tunnel (the above command forwards the remote port to your local port 9013). For example, to start the JAVA Oscilloscope client you would first need to set the MOTECOM environment variable as follows:

export MOTECOM=sf@localhost:9013

Now you can start the Oscilloscope GUI by typing:

./run

in the tinyos-2.x/apps/Oscilloscope/java directory as described in TinyOS 2 tutorial 5.

You should now see an Oscilloscope GUI like the one described in the TinyOS tutorial.
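The tunnel and MOTECOM steps above can be collected in a small script. This is a minimal sketch, assuming port 9013 as in the example; the port for your job is the one printed at the end of the execution log. The helper names `tunnel_cmd` and `motecom_for` and the `SF_PORT` variable are hypothetical and not part of TWIST or TinyOS.

```shell
#!/bin/sh
# Sketch: helpers for reaching a remote SerialForwarder through an SSH tunnel.
# SF_PORT is an assumption; take the actual value from your execution log.
SF_PORT="${SF_PORT:-9013}"

# Print the ssh command that forwards the remote port to the same local port.
tunnel_cmd() {
    printf 'ssh -nNxTL %s:localhost:%s twistextern@www.twist.tu-berlin.de\n' "$1" "$1"
}

# Print the MOTECOM value that points a TinyOS client at the local tunnel end.
motecom_for() {
    printf 'sf@localhost:%s\n' "$1"
}

# Show the tunnel command to run in a separate shell, then set MOTECOM here.
tunnel_cmd "$SF_PORT"
MOTECOM="$(motecom_for "$SF_PORT")"
export MOTECOM
echo "MOTECOM=$MOTECOM"
```

Keeping the port in one variable ensures that the tunnel and the client always agree on it.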

| Attachment | Size |

|---|---|

| TWIST_job_management.png | 104.83 KB |

| TWIST_active_job_control.png | 89.52 KB |

| TWIST_example_job_control.png | 61.46 KB |

Using cURL for automated control

cURL is a command line tool that can, among other things, transfer files and POST web forms via HTTPS. It can thus be used to automate sequences of operations on the testbed, such as installing an image or powering a node off. Before you can actually control your job you need to authenticate via cURL (Step 1) and find your job ID (Step 2). Afterwards you can control your job (Step 3) and download traces (Step 4) associated with your job ID. The following steps list the relevant cURL commands.

Step 1: Authenticate

Use the following format to authenticate and store the secure cookie for the future requests (replace YOUR_USER_NAME and YOUR_PASSWORD with your username and password, respectively):

curl -L -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -d 'username=YOUR_USER_NAME' -d 'password=YOUR_PASSWORD' -d 'commit=Sign in' https://www.twist.tu-berlin.de:8000/__login__

Note that all data fields have to be URL encoded, either implicitly using --data-urlencode or explicitly (this matters when your username or password contains special characters).
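The implicit variant can be sketched as follows. This is a minimal example that assumes the same login URL and form fields as the command above; the function name `twist_login` and the `CURL` and `COOKIES` variables are hypothetical helpers, not part of TWIST.

```shell
#!/bin/sh
# Sketch: login with cURL doing the URL encoding of each field value.
# CURL and COOKIES are illustrative variables (assumptions).
CURL="${CURL:-curl}"
COOKIES="${COOKIES:-/tmp/cookies.txt}"

# twist_login USERNAME PASSWORD
# --data-urlencode encodes the part after the first "=", so characters
# such as "&" or spaces in the password are transmitted correctly.
twist_login() {
    "$CURL" -L -k --cookie "$COOKIES" --cookie-jar "$COOKIES" \
        --data-urlencode "username=$1" \
        --data-urlencode "password=$2" \
        --data-urlencode 'commit=Sign in' \
        https://www.twist.tu-berlin.de:8000/__login__
}

# Usage (commented out so that loading this file sends no request):
# twist_login YOUR_USER_NAME 'YOUR_PASSWORD'
```

Setting `CURL=echo` before calling the function prints the arguments instead of sending the request, which is a simple way to check the command line.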

Step 2: Find the job_id

You need to know the job_id of a job before you can control it with cURL. The job_id can also be found by fetching and parsing the jobs page with cURL, optionally passing the output through "tidy":

curl -L -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt https://www.twist.tu-berlin.de:8000/jobs | tidy
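The parsing step could look like the sketch below. The markup of the jobs page is not documented here, so the pattern is an assumption: it presumes that each job ID appears right after the text `job_id`, for example in a form field or a link. Inspect the real page and adapt the pattern before relying on it. The function name `extract_job_ids` is hypothetical.

```shell
#!/bin/sh
# Sketch: list candidate job IDs found in the HTML of the jobs page.
# ASSUMPTION: IDs follow the text "job_id", e.g. name="job_id" value="346"
# or ?job_id=346; the real page may be structured differently.
# Uses grep -o, which GNU, BSD and BusyBox grep provide.
extract_job_ids() {
    grep -o 'job_id[^0-9]*[0-9][0-9]*' | grep -o '[0-9][0-9]*$' | sort -un
}

# Usage (commented out so that loading this file sends no request):
# curl -L -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt \
#     https://www.twist.tu-berlin.de:8000/jobs | extract_job_ids
```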

Step 3: Control

The following examples show how to control a job. Make sure that you replace the job_id and the node IDs with your own.

- Erase - For job_id 346, erase nodes 12 and 13:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F erase=Erase https://www.twist.tu-berlin.de:8000/jobs/control

- Install - For job_id 346, install TestSerialBandwidth on nodes 12 and 13 and start serial forwarders:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F ctrl.grp1.image=@/home/hanjo/tos/tinyos-2.x/apps/tests/TestSerialBandwidth/build/telosb/main.exe -F ctrl.grp1.sfversion=2 -F ctrl.grp1.sfspeed=115200 -F install=Install https://www.twist.tu-berlin.de:8000/jobs/control

- Power Off - For job_id 346, power off nodes 12 and 13:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F 'power_off=Power Off' https://www.twist.tu-berlin.de:8000/jobs/control

- Power On - For job_id 346, power on nodes 12 and 13:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F 'power_on=Power On' https://www.twist.tu-berlin.de:8000/jobs/control

- Start Tracing - For job_id 346, start tracing on nodes 12 and 13:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F 'start_tracing=Start Tracing' https://www.twist.tu-berlin.de:8000/jobs/control

- Stop Tracing - For job_id 346, stop tracing on nodes 12 and 13:

curl -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -F __nevow_form__=controlJob -F job_id=346 -F ctrl.grp1.nodes="12 13" -F 'stop_tracing=Stop Tracing' https://www.twist.tu-berlin.de:8000/jobs/control
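Except for Install, the control commands above differ only in the job_id, the node list and the button field. The following minimal sketch wraps them in one function, assuming you have already authenticated as in Step 1. The function name `twist_control` and the `CURL` and `COOKIES` variables are hypothetical helpers, not part of TWIST; Install needs additional fields (image, sfversion, sfspeed) and is not covered.

```shell
#!/bin/sh
# Sketch: one wrapper for the Erase / Power / Tracing control requests.
# CURL and COOKIES are illustrative variables (assumptions).
CURL="${CURL:-curl}"
COOKIES="${COOKIES:-/tmp/cookies.txt}"
CONTROL_URL="https://www.twist.tu-berlin.de:8000/jobs/control"

# twist_control JOB_ID "NODE IDS" FIELD LABEL
# FIELD and LABEL are the form field and button text from the examples,
# e.g. erase Erase, power_off "Power Off", start_tracing "Start Tracing".
twist_control() {
    "$CURL" -k --cookie "$COOKIES" --cookie-jar "$COOKIES" \
        -F __nevow_form__=controlJob \
        -F "job_id=$1" \
        -F "ctrl.grp1.nodes=$2" \
        -F "$3=$4" \
        "$CONTROL_URL"
}

# Usage (commented out so that loading this file sends no request):
# twist_control 346 "12 13" erase Erase
# twist_control 346 "12 13" power_off "Power Off"
```

With `CURL=echo` the function prints the assembled arguments instead of contacting the server, which helps when checking a scripted sequence before running it against a live job.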

Step 4: Collect data

To collect a specific trace file from archived job 339:

curl -g -k --cookie /tmp/cookies.txt --cookie-jar /tmp/cookies.txt -d 'job_id=339' -d 'trace_name=trace_20080507_114824.0.txt.gz' -o trace_20080507_114824.0.txt.gz https://www.twist.tu-berlin.de:8000/jobs/archive/traces/download
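The downloaded trace is gzip-compressed, as its file name indicates. A minimal sketch for a quick look at its first lines without unpacking it on disk; the function name `trace_preview` is hypothetical and no assumption is made about the format of the trace contents.

```shell
#!/bin/sh
# Sketch: print the first N lines (default 10) of a gzip-compressed trace.
# trace_preview FILE [N]
trace_preview() {
    gzip -dc "$1" | head -n "${2:-10}"
}

# Usage (commented out; the file name is the one from the example above):
# trace_preview trace_20080507_114824.0.txt.gz 20
```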

Hardware and testbed lay-out

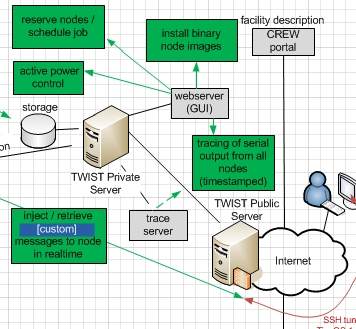

The TKN Wireless Indoor Sensor network Testbed (TWIST), developed by the Telecommunication Networks Group (TKN) at the Technische Universität Berlin, is a scalable and flexible testbed architecture for experimenting with wireless sensor network applications in an indoor setting. It provides basic services like node configuration, network-wide programming, out-of-band extraction of debug data and gathering of application data, as well as several novel features:

- experiments with heterogeneous node platforms

- support for flat and hierarchical setups

- active power supply control of the nodes

The self-configuration capability, the use of hardware with standardized interfaces and open-source software make the TWIST architecture scalable, affordable, and easily replicable. The TWIST architecture was published in this paper.

The TWIST instance deployed at the TKN group is one of the largest academic testbeds for indoor deployment scenarios. It spans the three floors of the FT building at the TU Berlin campus, resulting in more than 1500 square meters of instrumented office space. Currently the setup is populated with two sensor node platforms:

- 102 TmoteSky nodes, which are specified in detail here.

- 102 eyesIFXv2 nodes; this platform is an outcome of the EU IST EYES project. The platform is based on an MSP430 MCU and the TDA5250 transceiver, which operates in the 868 MHz ISM band using ASK/FSK modulation with data-rates up to 64 Kbps. A summary of the platform's hardware components is given, for example, in this paper.

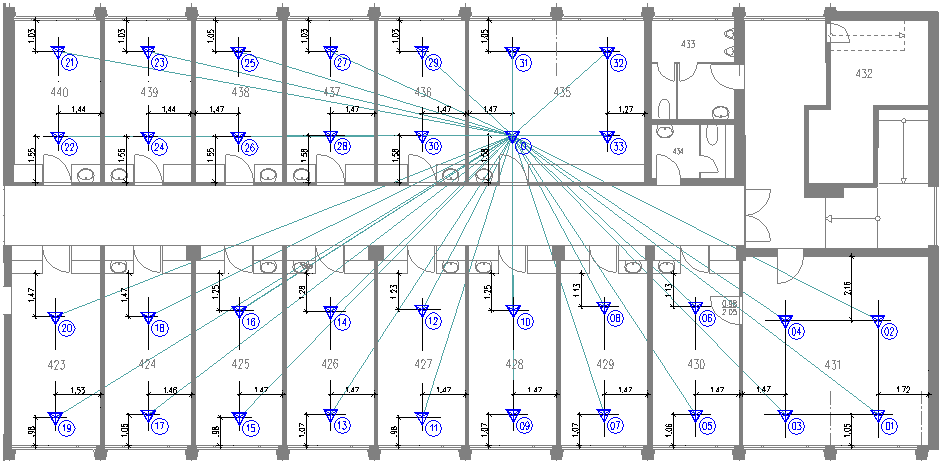

In the small rooms, two nodes of each platform are deployed, while the larger ones have four nodes. The setup results in a fairly regular grid deployment pattern with an inter-node distance of 3 m. The following shows the node placement on the 4th floor of the building (floors 3 and 2 have a very similar layout):

The testbed architecture can be divided into three tiers. The sensor nodes form the lowest tier; they are attached to the ceiling as visualized in the following figure, which shows a Tmote Sky and an eyesIFXv2 node in one of the office rooms:

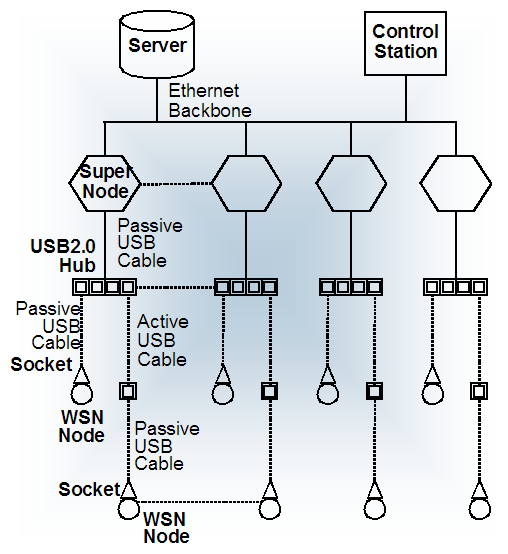

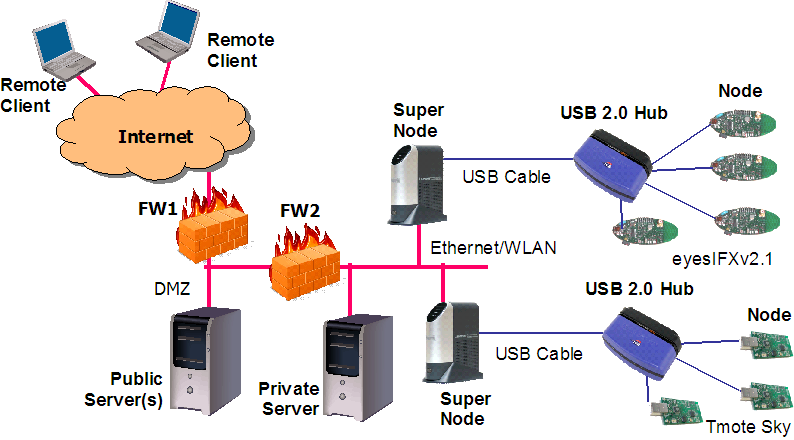

Sensor nodes are connected via USB cabling and USB hubs to the testbed infrastructure. If TWIST relied only on the USB infrastructure, it would be limited to 127 USB devices (both hubs and sensor nodes) with a maximum distance of 30 m between the control station and the sensor nodes (achieved by daisy-chaining up to 5 USB hubs). Therefore the TWIST architecture includes a second tier: so-called "super nodes", which are able to interface with the previously described USB infrastructure. We use Linksys Network Storage Link for USB2.0 (NSLU2) devices as super nodes, as depicted in the following picture:

The third and last tier of the architecture consists of the server and the control stations, which interact with the super nodes using the testbed backbone. The server, among other things, runs a PostgreSQL database that stores a number of tables, including configuration data such as the registered nodes. It also provides remote access via a web interface. The following figure provides a general overview of the TWIST hardware architecture:

The hardware instantiation of the TWIST hardware architecture at the TKN group is shown in this figure:

| Attachment | Size |

|---|---|

| TWIST_components.png | 115.05 KB |

| TWIST_architecture.png | 70.2 KB |

| TWIST_slug.png | 1.04 MB |

| TWIST_telos.png | 1.01 MB |

| TWIST_floorplan.png | 25.42 KB |

| TWIST_tmote_and_eyesIFXv2.png | 66.14 KB |

System health monitoring

The system health of the TKN TWIST instance is constantly monitored using the CACTI monitoring tools:

You can use the CACTI System Health Summary, which displays information on the utilization of the testbed server and the super node status. The information is updated every 30 min.

Alternatively, you can access the CACTI System Health Browser to see more fine-grained information on particular system components (please use account name "guest" and password "guest" to get access to the public data).

w-iLab.t documentation

All documentation for the w-iLab.t Office and Zwijnaarde is now available here.

IMEC sensing engine documentation

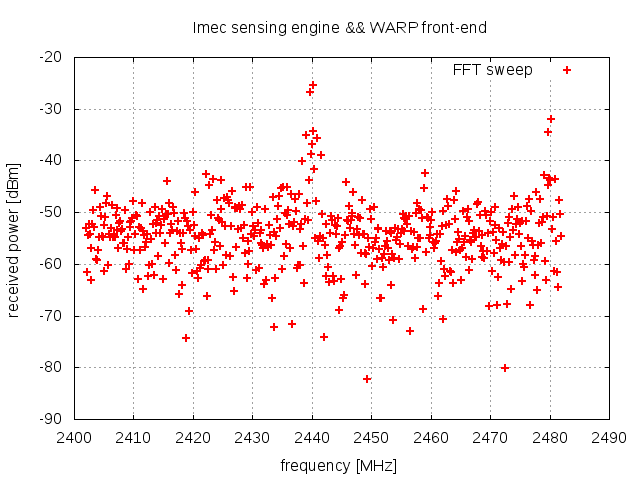

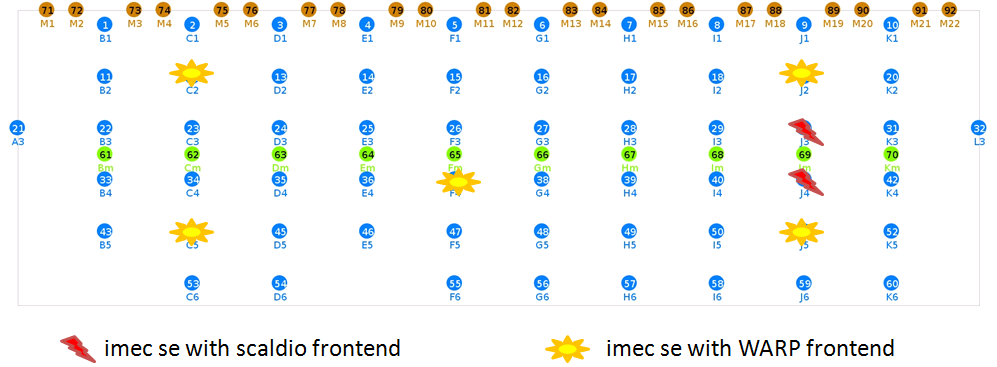

To achieve dynamic spectrum access, sensing techniques are crucial. The IMEC Sensing Engine can add sensing capabilities to radio systems and enable the evaluation of cognitive network solutions. Both hardware and firmware are reconfigurable, which makes it possible to support and evaluate a wide range of sensing applications. A CREW application programming interface (API) is implemented as a Linux library to ease the writing of new applications in a standard way. Currently, eight prototypes are deployed in the CREW w-iLab.t test-bed. These are connected via a USB interface to their corresponding Zotac nodes. Figure 1 shows an unpackaged version of the IMEC sensing engine with a Scaldio front-end.

Figure 1. Unpackaged IMEC sensing engine with a Scaldio-2B wide band front-end.

At present seven units are deployed in the w-iLab.t Zwijnaarde testbed and one is deployed in the w-iLab.t office testbed. An introduction on how to access the IMEC sensing nodes in the CREW w-iLab.t facility is available on the following pages:

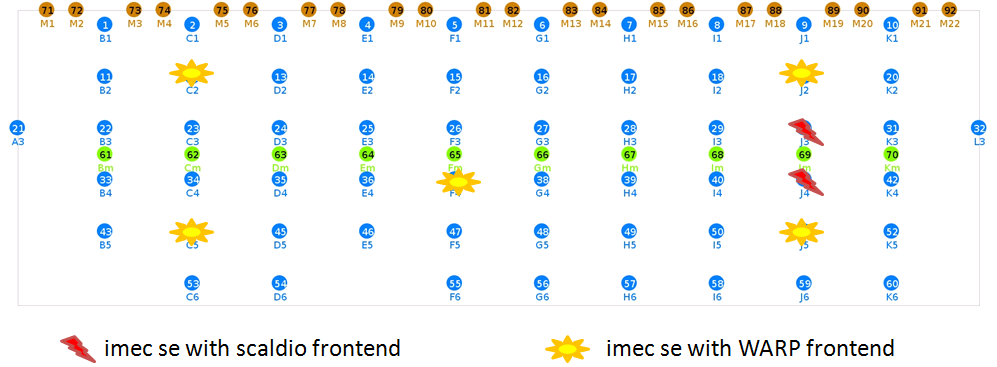

Figure 2. Deployment of IMEC sensing engine in the CREW Zwijnaarde w-iLab-t test-bed

The next sections focus on developing firmware for the IMEC sensing engine. Additional information can be found in the following documents:

| Attachment | Size |

|---|---|

| SensingPrototypes-20110822.pdf | 880.07 KB |

| SensingEngine_UserManual_CREW.pdf | 1.43 MB |

| SensingEngineScaldio.JPG | 24.01 KB |

| 20140114_CREW_training_days_imecse_v1.0.pdf | 1.01 MB |

Hardware overview

Different hardware realizations of the IMEC sensing engine exist. This section details the hardware used for the IMEC sensing engines deployed in the CREW w-iLab.t test-bed.

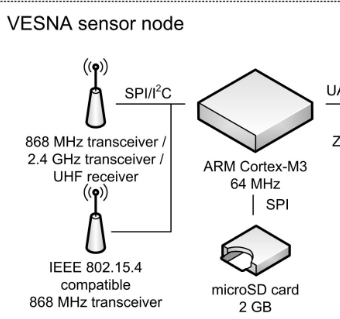

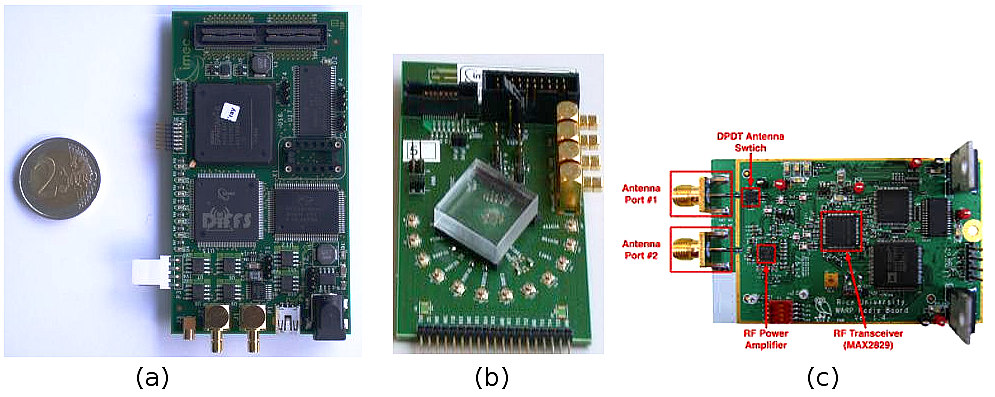

The IMEC sensing engine consists of three main components: a digital processing part, an analog front end and an antenna. The following paragraphs detail these:

- The SPIDER digital board contains the IMEC DIFFS chip, which is the key component for the real-time signal processing used in sensing (Figure 1.a). Two generations of this board exist, version 1 and version 2; all prototypes deployed for CREW use version 2. Each board has a SPIDER identification number greater than 128, derived by adding 128 to the number on the white label of the SPIDER board. These SPIDER identification numbers are available in the test-bed documentation section, e.g. for the CREW Zwijnaarde w-iLab.t test-bed. An FPGA and on-board SRAM allow the SPIDER board to be reconfigured. Currently two configurations exist, depending on the connected front-end type.

- The analog front end board downconverts the signal band of interest to base-band. Two types of front-end are supported for the SPIDER v2 board (*):

- The IMEC Scaldio-2B wide band front-end, which supports sensing from 520 MHz to 6.32 GHz (Figure 1.b). Note that an appropriate antenna is needed for the selected band.

- The commercial WARP frontend for the 2.4 GHz and 5.2 GHz ISM bands (Figure 1.c).

(*) The SPIDER board needs to be configured properly to support one of these front-end types.

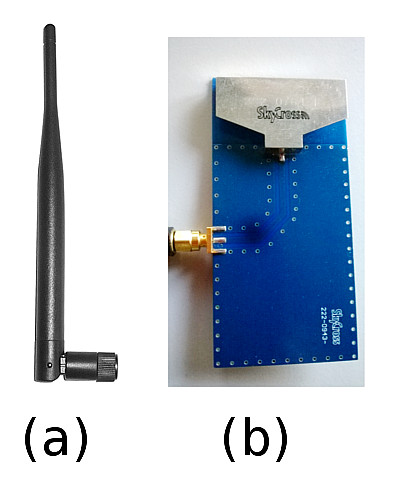

- To enable correct sensing, a suitable antenna is required. For the WARP front end a WiFi antenna is typically used (Figure 2.a). For the Scaldio-2B front end an antenna suited to the frequency band of interest is recommended. Figure 2.b shows a wide band antenna suited for the frequency range from 800 MHz to 2.5 GHz.

Figure 1 : Overview of boards for IMEC sensing engines deployed in CREW (a) SPIDER v2 digital board (b) IMEC Scaldio-2B analog front-end (c) WARP analog front-end. A two Euro coin is shown to indicate the size of these boards.

Figure 2 : Example antennas (a) WiFi antenna (b) broadband antenna for the 800 MHz to 2.5 GHz range
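The rule for the SPIDER identification number described above (label number plus 128) can be written as a one-line helper. This is only an illustration of the arithmetic; the function name `spider_id` and the label value 5 are hypothetical.

```shell
#!/bin/sh
# Sketch: derive the SPIDER identification number from the number printed
# on the white label of the board (ID = label number + 128).
spider_id() {
    echo $(( $1 + 128 ))
}

spider_id 5   # prints 133 for a hypothetical label number 5
```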

| Attachment | Size |

|---|---|

| SPIDER-SCALDO2B-WARP.jpg | 112.92 KB |

| SE-antennas.jpg | 32.63 KB |

Software and hardware configuration

To use the IMEC sensing engine, the hardware needs to be configured with the CREW API. The corresponding libraries are typically pre-compiled for the Zotac nodes of the w-iLab.t test-bed. Here we briefly illustrate the main configuration steps, as required when updating or extending nodes with IMEC sensing capabilities. We show this for the default organisation of the development files, as illustrated in the user manual.

Figure 1 : software stack of the IMEC sensing engine

Software configuration

A high level overview of the software is shown in Figure 1. The software runs on Linux. The standard (lib)USB development kit implements the low level communication with the USB port on the SPIDER hardware board. An advantage of this approach is that the hardware abstraction layer (HAL), CREW sensing API (application programming interface) and application can be developed in user mode instead of kernel mode. The configuration is done as follows.

- Start up a terminal session

- Navigate to the top level directory of the development kit. You see two directories : "software" and "spider"

- cd spider/software/usb_interface/SensingEngine/ : this is the point where applications can be developed on top of the CREW sensing API

- source environment.csh : configure the environment with some additional variables